I got a chance to put the txshield demo s/w onto the calibrator and calibrate it up. While at it, I added the ability to display real-world units (Volts, Amps, Watts etc.) instead of raw A/D units. The calibration multipliers get applied right at the final printf; everything up until then is done in raw A/D units. The multipliers live in calib.h, and if you’d rather stick to raw A/D units, you can just set them all to 1. Here’s what they look like for my specimen:

    //
    // Change these all to 1.0 if you want raw A/D units
    //
    const float VCAL = 0.24119479813;
    const float ICAL[MAX_CHANNELS] = {
      0.04874034071,
      0.04897653045,
      0.04883745917,
      0.01989279489
    };
    const int ADC_LAG = 269; // ~4.8 degrees
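
A minimal sketch of what "applied right at the final printf" could look like. Only `VCAL`, `ICAL` and `MAX_CHANNELS` come from calib.h; the `raw_reading_t` struct, the helper names, and `MAX_CHANNELS` being 4 are assumptions made up for this illustration, not taken from the demo source.

```c
#include <stdio.h>

#define MAX_CHANNELS 4   /* assumed: one entry per CT input */

/* Multipliers copied from the calib.h excerpt above */
static const float VCAL = 0.24119479813f;
static const float ICAL[MAX_CHANNELS] = {
    0.04874034071f, 0.04897653045f, 0.04883745917f, 0.01989279489f
};

/* Hypothetical per-channel results, still in raw A/D units */
typedef struct {
    float vrms_raw;    /* RMS voltage, A/D counts */
    float irms_raw;    /* RMS current, A/D counts */
    float preal_raw;   /* mean of v*i, A/D counts squared */
} raw_reading_t;

float to_volts(float v_raw)        { return v_raw * VCAL; }
float to_amps(float i_raw, int ch) { return i_raw * ICAL[ch]; }

/* Power needs no multiplier of its own: it scales by VCAL * ICAL[ch] */
float to_watts(float p_raw, int ch) { return p_raw * VCAL * ICAL[ch]; }

/* Scaling happens only here, at print time */
void print_channel(int ch, const raw_reading_t *r)
{
    float vrms  = to_volts(r->vrms_raw);
    float irms  = to_amps(r->irms_raw, ch);
    float papp  = vrms * irms;
    float preal = to_watts(r->preal_raw, ch);
    float pf    = (papp != 0.0f) ? preal / papp : 0.0f;

    printf("%d: Vrms: %.2f, Irms: %.2f, Papp: %.2f, Preal: %.2f, PF: %.3f\n",
           ch, vrms, irms, papp, preal, pf);
}
```

With every multiplier set to 1.0, the same code prints raw A/D units.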

I’ve got 3x SCT013s (100A:50mA) plugged into channels 0-2, and an SCT006 (20A:25mA) plugged into channel 3. I’m using the standard 33R shunts onboard the shield (which is probably a bit of a stretch for the SCT006), and a locally sourced 9VAC wall-wart for the VT. All calibration was done at 230V, 10A, 50Hz.

The nominal multiplier for the SCT006 is:

I / 4096 * 3.3 / 33 / 0.025 * 20, or I * 0.01953125 (c.f. 0.01989279489 calibrated)

The nominal multiplier for the SCT013 is:

I / 4096 * 3.3 / 33 / 0.05 * 100, or I * 0.048828125 (c.f. 0.04883745917 calibrated)
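
The two nominal current multipliers above follow the same recipe, so here is a small sketch of it as a function. The function and parameter names are invented for illustration; the numbers (3.3V reference, 4096 counts, 33R shunt, CT ratios) are the ones quoted above.

```c
/* Nominal amps-per-count for a current-output CT feeding a shunt.
   vref              ADC reference voltage, volts
   adc_counts        number of ADC codes (4096 for 12 bits)
   shunt_ohms        shunt (burden) resistance, ohms
   ct_secondary_amps CT output current at its rated primary current
   ct_primary_amps   CT rated primary current */
double nominal_ical(double vref, double adc_counts, double shunt_ohms,
                    double ct_secondary_amps, double ct_primary_amps)
{
    double volts_per_count = vref / adc_counts;
    double secondary_amps_per_count = volts_per_count / shunt_ohms;
    return secondary_amps_per_count / ct_secondary_amps * ct_primary_amps;
}

/* How far a calibrated multiplier sits from nominal, in percent */
double pct_from_nominal(double calibrated, double nominal)
{
    return (calibrated - nominal) / nominal * 100.0;
}
```

`nominal_ical(3.3, 4096, 33, 0.05, 100)` gives the SCT013 figure and `nominal_ical(3.3, 4096, 33, 0.025, 20)` the SCT006 one. Fed through `pct_from_nominal`, the SCT006 calibration comes out about 1.85% above nominal, while the SCT013 on channel 2 is within about 0.02%.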

The nominal V multiplier is a bit trickier due to the wide range of VT output voltages, but if you start with the effective divider of your VT you can get pretty close. My VT has a divider very close to 23, i.e. when I put 230V in I get 10V out. Using that, the nominal multiplier for V is:

V / 4096 * 3.3 * 23 * 13, or V * 0.240894 (c.f. 0.24119479813 calibrated)
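
The same calculation as a sketch in C. The names are invented for illustration, and `front_end_ratio` is simply the factor of 13 from the formula above (assumed here to be the scaling between the VT output and the ADC pin).

```c
/* Effective VT divider from one measured point, e.g. 230V in, 10V out */
double vt_divider(double primary_volts, double secondary_volts)
{
    return primary_volts / secondary_volts;
}

/* Nominal volts-per-count for the voltage channel.
   vref            ADC reference voltage, volts
   adc_counts      number of ADC codes (4096 for 12 bits)
   vt_ratio        effective VT divider (23 for the VT described above)
   front_end_ratio the factor of 13 in the formula above (assumption:
                   scaling between VT output and ADC input) */
double nominal_vcal(double vref, double adc_counts,
                    double vt_ratio, double front_end_ratio)
{
    return vref / adc_counts * vt_ratio * front_end_ratio;
}
```

`nominal_vcal(3.3, 4096, vt_divider(230, 10), 13)` reproduces the 0.240894 figure.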

Calibrate V and I and you get Power for free.

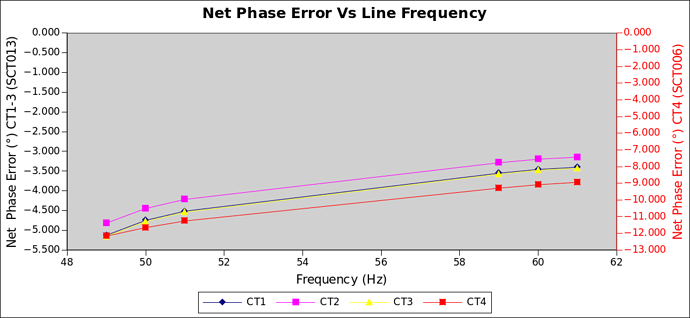

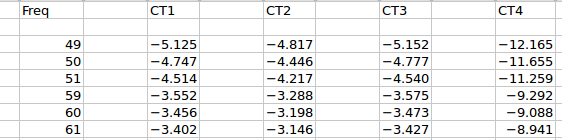

Phase error was calibrated at 90°. The 3 SCT013s called for corrections of 4.866°, 4.409° and 4.873° respectively. Those are net figures, i.e. relative to the VT (I’ve no idea how much the CT and the VT each contribute). The SCT006 called for a correction of 11.599°. Since I’m using the simplistic ADC lag technique to calibrate away phase error, it’s a one-size-fits-all situation and I went with a 269 usec lag (or ~4.8° at 50Hz). The poor showing of the SCT006 measurements shows just how vital per-channel phase correction is (but we already knew that). It got less than half of the phase error correction it needed, and it shows. I tested the calibration at 3 different phase settings: purely resistive, PF of 0.5, and purely reactive.
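
For anyone wanting to convert between a lag in usec and degrees of phase, it's just the lag as a fraction of one mains cycle. A quick sketch, with function names made up for illustration:

```c
/* Phase shift in degrees produced by a time lag at a given mains frequency */
double lag_us_to_degrees(double lag_us, double mains_hz)
{
    return lag_us * 1e-6 * mains_hz * 360.0;
}

/* Time lag in usec needed to produce a given phase shift */
double degrees_to_lag_us(double degrees, double mains_hz)
{
    return degrees / 360.0 / mains_hz * 1e6;
}
```

At 50Hz, 269 usec works out to about 4.84°, and the SCT006's 11.599° would need a lag of roughly 644 usec.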

Calibrator set to 230V, 10A, 0° phase shift (expected Preal = 2300W):

    CPU temp: 39C, Vdda: 3306mV
    0: Vrms: 230.05, Irms: 10.00, Papp: 2300.65, Preal: 2296.65, PF: 0.998
    1: Vrms: 230.05, Irms: 9.99, Papp: 2299.27, Preal: 2296.31, PF: 0.999
    2: Vrms: 230.05, Irms: 9.99, Papp: 2298.48, Preal: 2295.17, PF: 0.999
    3: Vrms: 230.05, Irms: 10.00, Papp: 2300.67, Preal: 2282.09, PF: 0.992

Calibrator set to 230V, 10A, 60° phase shift (expected Preal = 1150W):

    CPU temp: 39C, Vdda: 3302mV
    0: Vrms: 229.96, Irms: 10.00, Papp: 2299.63, Preal: 1150.86, PF: 0.500
    1: Vrms: 229.96, Irms: 10.00, Papp: 2300.39, Preal: 1155.70, PF: 0.502
    2: Vrms: 229.96, Irms: 10.00, Papp: 2299.46, Preal: 1148.40, PF: 0.499
    3: Vrms: 229.96, Irms: 10.00, Papp: 2299.84, Preal: 899.04, PF: 0.391

Calibrator set to 230V, 10A, 90° phase shift (expected Preal = 0W):

    CPU temp: 39C, Vdda: 3304mV
    0: Vrms: 230.06, Irms: 10.00, Papp: 2299.88, Preal: 2.55, PF: 0.001
    1: Vrms: 230.06, Irms: 10.00, Papp: 2300.57, Preal: 7.79, PF: 0.003
    2: Vrms: 230.06, Irms: 10.00, Papp: 2299.95, Preal: 0.16, PF: 0.000
    3: Vrms: 230.05, Irms: 10.00, Papp: 2300.53, Preal: -279.61, PF: -0.122

txshield_demo_7.tar.gz (884.3 KB)