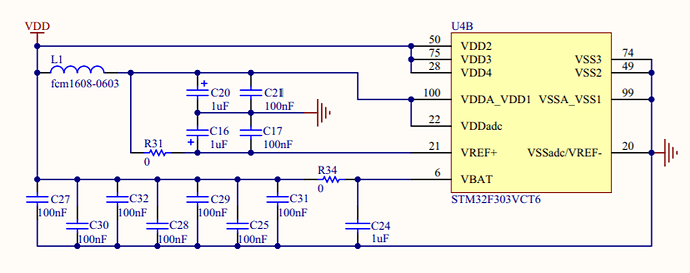

Removing R31 and adding a 2.048 V voltage reference should be possible here.

It’s beyond my knowledge whether C16 or C17 would need removing or replacing. The data sheet certainly says a cap is required, but I do not know what the optimum values might be.

I guess the Vref pin would also need connecting to one end of a pair of identical resistors in series to Gnd, so that the voltage divider can provide the op amp’s midrail reference at 1.024 V.
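As a quick sanity check on the divider idea, here is a rough Python sketch of the arithmetic. The 10 kΩ resistor value is purely a placeholder I picked for illustration (any matched pair gives the same ratio), and it assumes the ADC is run at 12-bit resolution; the function names are made up for this example.

```python
def divider_midpoint(v_ref: float, r_top: float, r_bottom: float) -> float:
    """Unloaded output of a two-resistor divider from v_ref to Gnd."""
    return v_ref * r_bottom / (r_top + r_bottom)


def adc_lsb(v_ref: float, bits: int = 12) -> float:
    """Size of one ADC step in volts for a given reference and resolution."""
    return v_ref / (2 ** bits)


V_REF = 2.048        # external reference voltage from the post
R = 10_000.0         # hypothetical resistor value, both resistors identical

mid = divider_midpoint(V_REF, R, R)   # midrail bias for the op amp
lsb = adc_lsb(V_REF)                  # volts per count (12-bit assumed)
mid_counts = round(mid / lsb)         # where the midrail sits in ADC counts

print(f"midrail: {mid:.3f} V")
print(f"LSB:     {lsb * 1000:.3f} mV")
print(f"midrail in counts: {mid_counts}")
```

This ignores any loading on the divider from whatever it feeds, so treat it as the ideal case only. The nice side effect of a 2.048 V reference is that each 12-bit count works out to half a millivolt.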

The Discovery boards do not have the Arduino connectors, but that would not be an issue if we are making something with more CTs, as it can be made to fit the Discovery connectors.

The Discovery is a couple of quid cheaper than the Nucleo144 boards, and has a “STM32F303VCT6 microcontroller featuring 256-Kbyte Flash memory, 48-Kbyte RAM in an LQFP100 package”. So whilst it has less flash and SRAM, it does have all 39 ADC channels accessible and the ability to use an external Vref (by hacking the proto board).

I do not see any other obvious differences in the MCU (I’ve not checked every feature). The board, however, is radically different. It still has an ST-Link programmer, and it also has a compass, motion detector and gyroscope. Not that an energy monitor has much use for any of those, but they might be fun to play with once the monitor is built.