That’s the one I used in those early STM32 emonTx shield experiments last year. The one I have (single sample) has a voltage ratio of pretty much exactly 23: 230V in gives 10V out. I’m not sure how you turn that into a Vcal value, but hopefully you can.

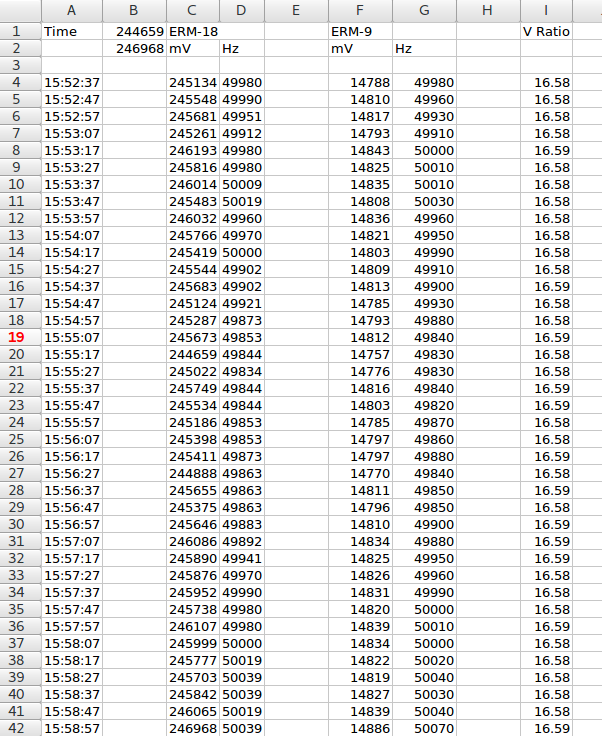
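For what it’s worth, here is a rough sketch of how that ratio might be turned into a starting Vcal. It assumes Vcal works out to the mains voltage that would give 1V at the ADC input, i.e. the transformer ratio multiplied by the ratio of the resistive divider on the board. The divider ratio of 11 below is purely an assumption (a 100k/10k divider); check it against the actual schematic. The function names are made up for illustration, and the result is only a starting point to be trimmed against a reference meter.

```python
def voltage_ratio(v_primary, v_secondary):
    """Transformer voltage ratio from one measured pair of RMS voltages."""
    return v_primary / v_secondary


def vcal_estimate(transformer_ratio, divider_ratio):
    """Starting-point Vcal: mains volts per volt at the ADC input.

    Assumes Vcal = transformer ratio x divider ratio (no phase or
    component-tolerance correction).
    """
    return transformer_ratio * divider_ratio


# Measured on my single sample: 230V in gives 10V out.
ratio = voltage_ratio(230.0, 10.0)

# ASSUMPTION: divider ratio of 11, e.g. (100k + 10k) / 10k.
# This is not from the measurement above; take it from your board.
DIVIDER_RATIO = 11.0

vcal = vcal_estimate(ratio, DIVIDER_RATIO)
print(f"ratio = {ratio:.2f}, starting Vcal = {vcal:.1f}")
```

Because it’s a single sample measured at one mains voltage, a different unit or a different load on the secondary could easily shift the ratio a few percent.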

More recently I’ve been doing some dynamic range tests on my energy monitor. It’s designed for direct grid connection with an upper limit of about 270Vrms. I’ve long known it can easily measure all the way down to 90Vrms without changing the dividers, but I was curious just how low that could go. I happened to have one of these at hand, so I hooked up two monitors, sync’d to each other within a few mains cycles: one connected to the mains, the other connected to the output of that transformer. The results were impressive; with 24-bit ADCs you can accurately measure from ~14V up to ~270V with the one set of dividers. But back on this topic, it showed the ratio for that transformer is about 16.58 (again just a single sample).
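As a quick sanity check on those numbers, the arithmetic can be sketched as below. The 230V mains figure is an assumed nominal value, not what the mains monitor actually read during the test, and the variable names are just for illustration.

```python
import math

V_MAX = 270.0   # approximate upper limit of the monitor, Vrms
V_MIN = 14.0    # approximate lowest voltage measured accurately, Vrms
RATIO = 16.58   # transformer ratio seen in this test (single sample)

# ASSUMPTION: nominal 230V mains; the real reading was not recorded here.
V_MAINS_NOMINAL = 230.0

# Usable voltage span with the one set of dividers, as a ratio and in dB.
span = V_MAX / V_MIN
span_db = 20.0 * math.log10(span)

# Secondary voltage implied by the measured ratio at nominal mains.
v_secondary = V_MAINS_NOMINAL / RATIO

print(f"span = {span:.1f}:1 ({span_db:.1f} dB)")
print(f"secondary at nominal mains = {v_secondary:.2f} V")
```

Under that assumption the secondary comes out a little under 14V, which lines up with the low end of the range quoted above.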