Made a lot of progress on this project in the last few weeks. First, got a new board that incorporates whatnick’s suggestion of using double headers to accommodate both the 0.9 and newer 1.0 nodeMCU. Also added an MCP23S17 16-port GPIO expander that drives two LEDs and offers 14 digital I/O ports. Lowered the bias resistors to 4.7K, and it now runs solid at about 31.8K sample pairs per second. Results seem to match my revenue-grade meters within a couple of percent.

The device is now called IoTaWatt - Internet of Things appliance Watt meter.

The SD card is working well. The device configuration, including the cloud server info and CT configuration, is now kept in a JSON file on the SD card. The server info, including type (emonCMS for now), URL, and apiKEY, is kept there, as well as the reporting frequency etc.
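To give a rough idea of what reading such a file involves, here is a minimal Python sketch. It is not the firmware code and not the actual file layout: the key names (`server`, `type`, `url`, `apikey`, `postInterval`, `inputs`, `ampsPerVolt`), the example URL, and the `load_config` helper are all made up for illustration.

```python
import json

# Hypothetical config text. Every key name and value below is an
# assumption for illustration, not the real IoTaWatt file format.
SAMPLE_CONFIG = """
{
  "server": {
    "type": "emoncms",
    "url": "http://example.com/emoncms",
    "apikey": "YOUR-API-KEY",
    "postInterval": 10
  },
  "inputs": [
    {"channel": 1, "model": "SCT013-050", "ampsPerVolt": 49.8}
  ]
}
"""

def load_config(text):
    """Parse the config text and check that the server fields are present."""
    config = json.loads(text)
    server = config["server"]
    for key in ("type", "url", "apikey"):
        if key not in server:
            raise ValueError("missing server setting: " + key)
    return config

config = load_config(SAMPLE_CONFIG)
for ct in config["inputs"]:
    print(ct["channel"], ct["model"], ct["ampsPerVolt"])
```

Keeping the per-CT calibration next to the server settings means one file describes the whole device, which is what makes a browser-based editor practical later.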

Will be storing the feed data and derivatives on the SD card in the near future.

The big piece left to do is to build an AJAX application to view and modify the configuration file.

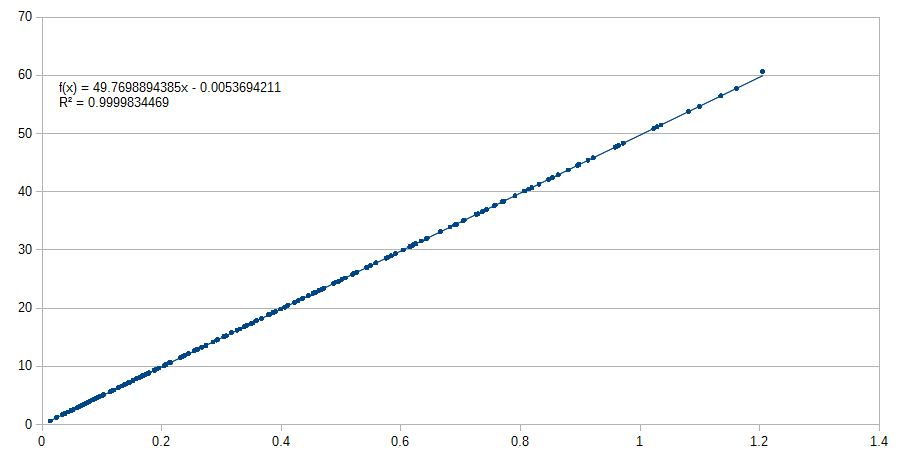

Accuracy has been the push in the last few weeks. Built a test rig that is driven by the device itself (reason for the GPIO chip). It provides an accurate calibration factor for a CT. Using a 6V AC power supply, a bank of resistors, and a set of current multiplier loops, the thing subjects a CT to up to 225 different current values from 500 mA to 75 A and records the CT output. Here’s the output for a YHDC SCT013-050:

The trend line regression yields the Amps per Volt that should be used to calibrate the CT (49.7698…or 49.8). As you can see, linearity is good throughout its range.
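For anyone who wants to reproduce that kind of fit, here is a minimal Python sketch of an ordinary least-squares trend line of applied amps against CT output volts. It assumes a fit with an intercept, which may not be how the trend line in the plot was set up, and the data is synthetic (an ideal 50 A per volt CT), not the rig's measurements. The `fit_line` name is just for this example.

```python
def fit_line(volts, amps):
    """Least-squares fit of amps = slope * volts + intercept.

    The slope is the Amps-per-Volt calibration factor.
    """
    n = len(volts)
    mean_v = sum(volts) / n
    mean_a = sum(amps) / n
    sxx = sum((v - mean_v) ** 2 for v in volts)
    sxy = sum((v - mean_v) * (a - mean_a) for v, a in zip(volts, amps))
    slope = sxy / sxx
    intercept = mean_a - slope * mean_v
    return slope, intercept

# Synthetic test points: 0.5 A to 75 A in 0.5 A steps through an
# ideal CT that outputs 1 V per 50 A. Not real measurements.
amps = [0.5 + 0.5 * i for i in range(150)]
volts = [a / 50.0 for a in amps]

amps_per_volt, offset = fit_line(volts, amps)
print("Amps per Volt:", round(amps_per_volt, 4), "offset:", round(offset, 4))
```

With real readings, an intercept well away from zero or residuals that grow at one end of the range would be a hint that the CT is not linear there.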

I’ve tested several of each CT model that I have, including some larger mains-type CTs. I’ve got a few smaller doughnut CTs coming in that I should be able to add to the JSON database for easy configuration.