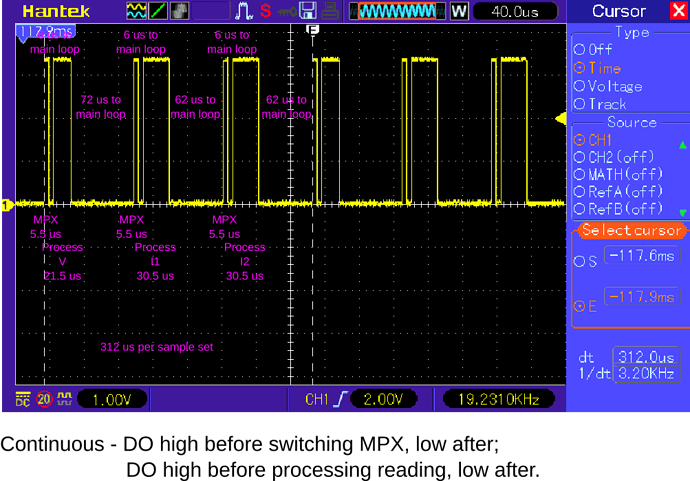

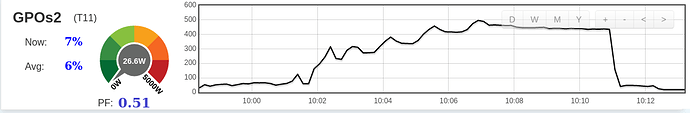

Thanks @Robert.Wall, I understand that. What I didn’t expect is that, for my test cases, ALL the loads seem to be steady (crock pots, a compressor, a hair dryer, fans), and it seems very easy to obtain accurate instantaneous power measurements both at high sample rates AND at “lower than high” sample rates. My “sampling scheme” goal is to take the minimum number of samples needed to reach a target accuracy (still TBD).

But I think I jumped the gun in a couple of those previous posts and would like to back up a bit.

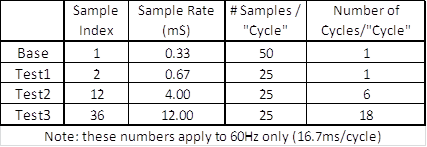

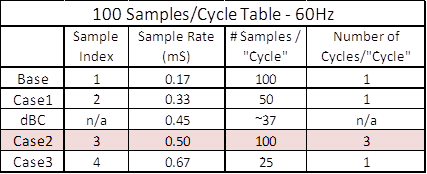

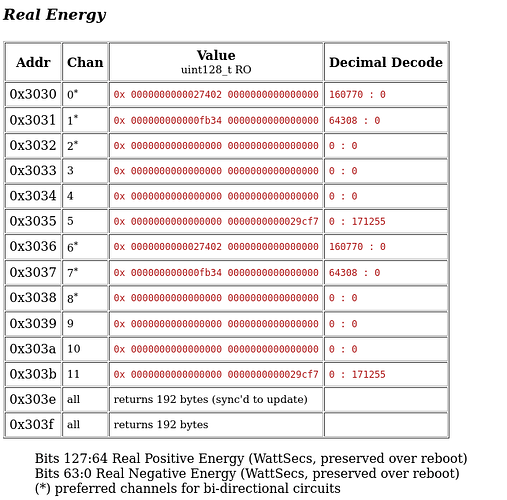

I didn’t explain the table I posted very well at all. For the given sample rates, it actually shows how to ensure this doesn’t happen. However, I didn’t post the entire table, and my explanation was terrible. I will revisit that a bit later.

Robert, this is easily solved by making sure the samples are not taken in one consecutive burst (which causes the problem you point out) but intermittently: consecutive samples spaced ~3/4 of a cycle apart (best), or variations on that. Even ~1/4-cycle spacing eliminates most of the problem. But this “example” raises a question…
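To make the burst-versus-spread point concrete, here is a minimal Python sketch of the scenario under discussion: a load that switches off at the 75% point of a one-second window. Every number in it is a hypothetical assumption, not a measurement: 60 Hz mains, a 120 V / 10 A load with a 0.3 rad current lag, and 80 samples per window. It compares 80 consecutive samples packed into the first 200 ms against 80 samples spaced 3/4 of a cycle apart across the whole second (with a 1/8-cycle start offset so no sample lands exactly on the switch-off instant).

```python
import math

# Hypothetical test setup (assumptions, not measured data).
MAINS_HZ = 60.0                 # assumed mains frequency
V_PEAK = 120.0 * math.sqrt(2)   # 120 V RMS
I_PEAK = 10.0 * math.sqrt(2)    # 10 A RMS
PHASE = 0.3                     # current lags voltage by 0.3 rad
WINDOW_S = 1.0                  # the "second" being measured
T_OFF = 0.75 * WINDOW_S         # load switches off at the 75% point
N = 80                          # samples per window in both schemes
CYCLE = 1.0 / MAINS_HZ


def inst_power(t):
    """Instantaneous power v(t) * i(t); the current is zero once the load is off."""
    w = 2 * math.pi * MAINS_HZ
    v = V_PEAK * math.sin(w * t)
    i = I_PEAK * math.sin(w * t - PHASE) if t < T_OFF else 0.0
    return v * i


def mean_power(times):
    """Real-power estimate: the average of v * i over the sample instants."""
    return sum(inst_power(t) for t in times) / len(times)


# Burst: N consecutive samples packed into the first 12 cycles (200 ms at 60 Hz).
burst = [k * 12 * CYCLE / N for k in range(N)]
# Spread: N samples, each 3/4 of a cycle after the previous one, covering ~1 s.
spread = [CYCLE / 8 + k * 0.75 * CYCLE for k in range(N)]

p_on = (V_PEAK * I_PEAK / 2) * math.cos(PHASE)  # real power while the load is on
true_mean = p_on * T_OFF / WINDOW_S              # average over the whole window

errors = {}
for name, times in (("burst", burst), ("spread", spread)):
    est = mean_power(times)
    errors[name] = (est - true_mean) / true_mean
    print(f"{name:6s} estimate {est:7.1f} W, true {true_mean:7.1f} W, "
          f"error {100 * errors[name]:+.1f}%")
```

With these numbers the burst sees the load as on for the whole window and overestimates real power by a third, while the spread samples pick up the switch-off and recover the true average. The sketch uses pure sinusoids; with a harmonic-rich current, note that spacing of exactly 3/4 cycle only ever visits four points on the waveform, which would be worth checking separately.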

Is your concern about the inaccuracy based on a single occurrence, where whatever waveform is being measured turns off at the 75% point of a particular “second” (which seems like somewhat of a wash in a 10-second sample)? Or is the concern that a device being sampled will consistently operate that way (harmonically?), so that error accumulates over a longer period of time?
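The difference between a one-off switch-off and a load that behaves the same way every time can be sketched with a simple snapshot model. The assumptions here are mine, not emonLib’s: a hypothetical 1 kW load, a 10 s reporting interval, a 200 ms snapshot at the start of each interval whose average power is assumed to hold for the whole interval, and one hour of intervals. In the “phase-locked” case the load is on for exactly the first 200 ms of every 10 s, so every snapshot catches it; in the “drifting” case it has the same 2% duty cycle but a 10.3 s period, so its timing slides past the snapshots.

```python
P_ON = 1000.0      # W, hypothetical load
INTERVAL_S = 10.0  # reporting interval
SNAP_S = 0.2       # snapshot at the start of each interval
DT = 0.01          # s, step used to integrate the "true" energy
N_INTERVALS = 360  # one hour of 10 s intervals


def load_power(t, period, on_time):
    """Periodic load: on for the first `on_time` seconds of every `period`."""
    return P_ON if (t % period) < on_time else 0.0


def snapshot_vs_actual(period, on_time):
    """Return (snapshot-extrapolated energy, actual energy) in joules."""
    steps = round(INTERVAL_S / DT)
    snap_steps = round(SNAP_S / DT)
    snapshot_j = actual_j = 0.0
    for n in range(N_INTERVALS):
        t0 = n * INTERVAL_S
        # Evaluate at each step's midpoint so no sample falls exactly on an edge.
        powers = [load_power(t0 + (s + 0.5) * DT, period, on_time)
                  for s in range(steps)]
        actual_j += sum(powers) * DT
        # Snapshot: average of the first 200 ms, assumed to last the whole interval.
        snapshot_j += sum(powers[:snap_steps]) / snap_steps * INTERVAL_S
    return snapshot_j, actual_j


results = {}
for label, period, on_time in (("phase-locked", 10.0, 0.2),
                               ("drifting", 10.3, 0.206)):
    snap, actual = snapshot_vs_actual(period, on_time)
    results[label] = (snap, actual)
    print(f"{label:12s} snapshot {snap / 3600:7.1f} Wh, actual {actual / 3600:6.1f} Wh, "
          f"ratio {snap / actual:5.2f}")
```

Over the hour, the phase-locked load is over-reported by a factor of 50 (it is on 2% of the time but every snapshot sees it on), while the drifting load comes out within roughly 10%, because its on/off timing keeps sweeping through the snapshot windows. A single switch-off, by contrast, can only corrupt the one interval it falls in.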

How does emonLib deal with a case where it samples a signal for 200 ms and then “something” turns off and is not recorded for the next 9.8 seconds?
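Two ways of bookkeeping this exact case can be compared in a few lines: a load that turns off right after a 200 ms burst inside a 10 s reporting interval. One estimate extrapolates the burst’s average power over the whole interval; the other accumulates v * i * dt for every sample in the interval. This is a hypothetical illustration, not emonLib or emonLibCM code; the 60 Hz mains, 2 kHz sample rate, and ~1 kW resistive load (170 V peak across 14.45 Ω) are all assumptions.

```python
import math

MAINS_HZ = 60.0      # assumed mains frequency
FS = 2000.0          # hypothetical sample rate, Hz
INTERVAL_S = 10.0    # reporting interval
BURST_S = 0.2        # length of the measured burst
T_OFF = 0.2          # load switches off right after the burst
V_PEAK = 170.0       # ~120 V RMS
R_LOAD = 14.45       # ohms; 170**2 / 2 / 14.45 = 1000 W while on


def sample(t):
    """Return (volts, amps) at time t; the current drops to zero at T_OFF."""
    v = V_PEAK * math.sin(2 * math.pi * MAINS_HZ * t)
    i = v / R_LOAD if t < T_OFF else 0.0
    return v, i


dt = 1.0 / FS
n_total = round(INTERVAL_S * FS)
n_burst = round(BURST_S * FS)

burst_sum = 0.0       # sum of v * i over the burst only
accumulated_j = 0.0   # running energy over every sample in the interval
for k in range(n_total):
    v, i = sample((k + 0.5) * dt)   # midpoint, so no sample lands exactly on T_OFF
    p = v * i
    accumulated_j += p * dt
    if k < n_burst:
        burst_sum += p

extrapolated_j = burst_sum / n_burst * INTERVAL_S
print(f"burst extrapolated: {extrapolated_j:8.1f} J")
print(f"continuous:         {accumulated_j:8.1f} J")
```

The continuous sum reports the 200 J actually used, while the extrapolated burst reports 10,000 J, fifty times too much. The error runs the other way if the load switches on during the unsampled 9.8 s.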

Also, I have no clue about the answer to this one: for power monitoring, what is “good enough”? I know that “better” is always better, so more specifically, what are the tolerances/goals for emonLib and emonLibCM in accurately accumulating energy use?

Thanks again for your help, Robert…feel free to cut me loose at any time and let me flounder away, haha.