So I had a bash at this today and successfully created both phpfina and phptimeseries feeds for each of the 8 variables by using the pre-formatted csv file attached to my last post and a simple bash script.

First, though, I used the input API to create some inputs so that I could then create the process lists, including setting up the feeds. For each input I added a "log to feed" process writing to a phptimeseries feed, and another writing to a phpfina feed with a 1-hour interval.

For the phpfina feed to work beyond the 48hr restriction, I also temporarily edited that “48hr” line in the feed engine code to a more liberal 1000 days, `$end = $now+(3600*48*500);`, and restarted apache2.
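As a quick sanity check on that edited line, multiplying the stock 48 hr window by 500 does give 1000 days. A small Python sketch of the arithmetic; the variable names are illustrative only and are not taken from the emoncms code:

```python
# Arithmetic behind the edited PHP line: $end = $now+(3600*48*500);
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 86400

default_window = SECONDS_PER_HOUR * 48        # the stock 48 hr limit, in seconds
edited_window = SECONDS_PER_HOUR * 48 * 500   # the temporary edit, in seconds

print(default_window)                    # 172800
print(edited_window)                     # 86400000
print(edited_window / SECONDS_PER_DAY)   # 1000.0 (days)
```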

Then I ran the attached script, first on a small subset and then on the remainder. It took only a couple of minutes to load all 8760 frames (70080 datapoints).
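For reference, 8760 hourly frames × 8 values gives the 70080 datapoints, and at 100 frames per request a single uninterrupted run would need 88 requests, the last holding 60 frames (splitting the run into a subset and a remainder changes where the short batch falls). A minimal Python sketch of that batching; the batch size of 100 matches the batches in this post, while the `batches` helper is hypothetical and not part of the attached script:

```python
import math

HOURS_IN_YEAR = 8760   # one frame per hour in a non-leap year
VALUES_PER_FRAME = 8   # the 8 variables (timestamp and node id not counted)
BATCH_SIZE = 100       # frames per bulk.json request

datapoints = HOURS_IN_YEAR * VALUES_PER_FRAME
requests_needed = math.ceil(HOURS_IN_YEAR / BATCH_SIZE)

def batches(frames, size=BATCH_SIZE):
    """Yield consecutive slices of at most `size` frames, in order."""
    for start in range(0, len(frames), size):
        yield frames[start:start + size]

print(datapoints)       # 70080
print(requests_needed)  # 88
```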

Here is a snippet of the screen output. It shows the final 2 batches, so only the first is a full batch of 100 frames (I’ve edited out my apikey).

```
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100     2  100     2    0     0      1      0  0:00:02  0:00:01  0:00:01     1
http://localhost/input/bulk.json?data=[[1514296800,4,325,101,13.9,4.6,299.59,19.534,593.214,567.136],[1514300400,4,65,115,12.8,4.1,153.136,15.24,311.321,292.203],[1514304000,4,101,25,11.7,3.6,53.991,11.643,100.379,85.698],[1514307600,4,0,0,8.3,2.6,0,8.3,0,0],[1514311200,4,0,0,7.8,2.1,0,7.8,0,0],[1514314800,4,0,0,9.4,4.1,0,9.4,0,0],[1514318400,4,0,0,10,5.2,0,10,0,0],[1514322000,4,0,0,8.9,5.7,0,8.9,0,0],[1514325600,4,0,0,8.9,4.1,0,8.9,0,0],[1514329200,4,0,0,7.2,6.7,0,7.2,0,0],[1514332800,4,0,0,5.6,5.2,0,5.6,0,0],[1514336400,4,0,0,4.4,5.2,0,4.4,0,0],[1514340000,4,0,0,3.3,5.2,0,3.3,0,0],[1514343600,4,0,0,2.8,6.2,0,2.8,0,0],[1514347200,4,0,0,2.2,5.7,0,2.2,0,0],[1514350800,4,0,0,1.1,5.7,0,1.1,0,0],[1514354400,4,0,0,0,4.6,0,0,0,0],[1514358000,4,177,11,-0.6,3.1,49.81,-1.145,85.709,71.312],[1514361600,4,646,27,1.1,4.6,253.398,4.877,480.251,457.104],[1514365200,4,647,55,1.7,5.7,394.636,7.897,805.708,773.607],[1514368800,4,867,52,2.8,4.6,601.099,14.379,1221.222,1175.408],[1514372400,4,899,58,3.9,4.6,688.042,17.635,1389.698,1337.594],[1514376000,4,918,54,4.4,5.2,705.457,17.613,1426.324,1372.798],[1514379600,4,886,55,5,5.2,642.367,17.037,1295.116,1246.595],[1514383200,4,830,46,5.6,5.2,511.059,15.028,1020.479,981.609],[1514386800,4,673,39,5.6,4.6,319.635,11.619,614.71,588.054],[1514390400,4,172,27,3.9,3.6,76.538,4.71,142.827,127.306],[1514394000,4,0,0,2.2,4.1,0,2.2,0,0],[1514397600,4,0,0,0.6,3.1,0,0.6,0,0],[1514401200,4,0,0,-0.6,3.1,0,-0.6,0,0],[1514404800,4,0,0,-1.7,2.1,0,-1.7,0,0],[1514408400,4,0,0,-2.2,1.5,0,-2.2,0,0],[1514412000,4,0,0,-3.3,0,0,-3.3,0,0],[1514415600,4,0,0,-3.3,0,0,-3.3,0,0],[1514419200,4,0,0,-4.4,0,0,-4.4,0,0],[1514422800,4,0,0,-4.4,2.6,0,-4.4,0,0],[1514426400,4,0,0,-4.4,2.6,0,-4.4,0,0],[1514430000,4,0,0,-5,2.1,0,-5,0,0],[1514433600,4,0,0,-6.1,2.1,0,-6.1,0,0],[1514437200,4,0,0,-5.6,3.6,0,-5.6,0,0],[1514440800,4,0,0,-6.1,3.6,0,-6.1,0,0],[1514444400,4,38,18,-5.6,4.1,26.896,-6.551,55.617,41.793],[1514448000,4,322,65,-3.9,5.2,189.557,-1.665,386.794,365.928],[1514451600,4,584,79,-2.2,6.2,391.708,3.526,818.285,785.806],[1514455200,4,370,160,-1.7,5.7,410.953,4.976,877.297,843.016],[1514458800,4,612,140,-0.6,5.2,590.969,9.976,1238.571,1192.128],[1514462400,4,600,124,-0.6,4.1,572.075,11.577,1191.248,1146.508],[1514466000,4,477,118,0.6,3.6,452.262,10.805,941.593,905.288],[1514469600,4,650,76,1.1,4.1,453.697,10.393,929.055,893.15],[1514473200,4,517,47,1.7,3.6,269.287,7.363,532.252,507.78],[1514476800,4,312,20,0,3.1,99.75,1.32,176.98,160.764],[1514480400,4,0,0,-1.7,3.1,0,-1.7,0,0],[1514484000,4,0,0,-2.2,2.6,0,-2.2,0,0],[1514487600,4,0,0,-3.3,1.5,0,-3.3,0,0],[1514491200,4,0,0,-3.9,2.6,0,-3.9,0,0],[1514494800,4,0,0,-4.4,2.6,0,-4.4,0,0],[1514498400,4,0,0,-4.4,3.1,0,-4.4,0,0],[1514502000,4,0,0,-4.4,3.1,0,-4.4,0,0],[1514505600,4,0,0,-5,4.1,0,-5,0,0],[1514509200,4,0,0,-5,4.1,0,-5,0,0],[1514512800,4,0,0,-5.6,3.1,0,-5.6,0,0],[1514516400,4,0,0,-6.1,2.6,0,-6.1,0,0],[1514520000,4,0,0,-6.7,2.1,0,-6.7,0,0],[1514523600,4,0,0,-7.2,2.6,0,-7.2,0,0],[1514527200,4,0,0,-7.2,2.6,0,-7.2,0,0],[1514530800,4,127,14,-6.7,2.1,43.691,-7.352,81.205,66.895],[1514534400,4,525,35,-4.4,4.1,223.149,-1.064,438.157,416.053],[1514538000,4,784,36,-2.2,4.6,436.405,5.673,895.334,860.491],[1514541600,4,873,51,-1.1,3.1,603.285,12.997,1233.105,1186.861],[1514545200,4,848,49,0.6,2.6,642.787,16.973,1302.103,1253.321],[1514548800,4,932,50,2.2,2.6,711.833,20.34,1421.47,1368.133],[1514552400,4,918,47,2.8,2.6,655.725,19.731,1305.913,1256.99],[1514556000,4,858,40,4.4,1.5,521.794,20.371,1017.04,978.284],[1514559600,4,736,30,3.9,2.6,334.077,12.177,638.945,611.628],[1514563200,4,399,16,2.8,2.1,113.82,5.039,193.093,176.543],[1514566800,4,0,0,-1.1,2.1,0,-1.1,0,0],[1514570400,4,0,0,-1.7,1.5,0,-1.7,0,0],[1514574000,4,0,0,-2.8,0,0,-2.8,0,0],[1514577600,4,0,0,-2.2,0,0,-2.2,0,0],[1514581200,4,0,0,-3.3,0,0,-3.3,0,0],[1514584800,4,0,0,-3.3,0,0,-3.3,0,0],[1514588400,4,0,0,-4.4,0,0,-4.4,0,0],[1514592000,4,0,0,-5,0,0,-5,0,0],[1514595600,4,0,0,-3.9,0,0,-3.9,0,0],[1514599200,4,0,0,-3.9,0,0,-3.9,0,0],[1514602800,4,0,0,-4.4,0,0,-4.4,0,0],[1514606400,4,0,0,-4.4,1.5,0,-4.4,0,0],[1514610000,4,0,0,-5,0,0,-5,0,0],[1514613600,4,0,0,-5.6,0,0,-5.6,0,0],[1514617200,4,32,19,-4.4,0,26.921,-5.719,56.342,42.504],[1514620800,4,26,66,-2.2,0,79.117,-2.747,174.037,157.881],[1514624400,4,12,96,-0.6,1.5,101.34,0.438,222.864,205.687],[1514628000,4,80,147,1.7,1.5,202.557,5.976,433.882,411.883],[1514631600,4,81,202,2.8,2.1,269.467,8.875,571.694,546.189],[1514635200,4,116,256,3.3,2.6,358.076,11.435,751.195,720.703],[1514638800,4,69,158,5,2.6,209.606,9.58,442.836,420.618],[1514642400,4,2,154,5,1.5,158.608,8.419,338.086,318.357],[1514646000,4,25,102,5,1.5,120.149,7.014,255.617,237.734],[1514649600,4,6,26,5,0,27.236,3.704,58.463,44.585],[1514653200,4,0,0,4.4,0,0,4.4,0,0]]&sentat=1471006684&apikey=blahblahblah
ok
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100     2  100     2    0     0      5      0 --:--:-- --:--:-- --:--:--     5
http://localhost/input/bulk.json?data=[[1514656800,4,0,0,4.4,0,0,4.4,0,0],[1514660400,4,0,0,3.9,1.5,0,3.9,0,0],[1514664000,4,0,0,3.9,0,0,3.9,0,0],[1514667600,4,0,0,4.4,0,0,4.4,0,0],[1514671200,4,0,0,4.4,0,0,4.4,0,0],[1514674800,4,0,0,3.9,0,0,3.9,0,0],[1514678400,4,0,0,3.3,2.1,0,3.3,0,0],[1514682000,4,0,0,3.3,0,0,3.3,0,0],[1514685600,4,0,0,3.3,0,0,3.3,0,0],[1514689200,4,0,0,3.3,0,0,3.3,0,0],[1514692800,4,0,0,3.3,0,0,3.3,0,0],[1514696400,4,0,0,3.3,1.5,0,3.3,0,0],[1514700000,4,0,0,3.3,2.1,0,3.3,0,0],[1514703600,4,1,6,3.9,2.1,5.968,1.976,12.943,0],[1514707200,4,3,56,4.4,1.5,57.117,3.752,123.938,108.794],[1514710800,4,4,110,5.6,2.1,112.542,6.803,241.412,223.837],[1514714400,4,6,174,5.6,1.5,180.822,9.26,383.932,363.134],[1514718000,4,1,243,6.7,2.1,252.353,12.208,529.144,504.752],[1514721600,4,1,230,7.8,0,237.071,17.525,485.382,462.106],[1514725200,4,1,216,8.3,1.5,223.369,13.784,465.09,442.322],[1514728800,4,40,164,10.6,2.1,197.546,14.762,407.784,386.417],[1514732400,4,69,88,10.6,1.5,120.818,12.844,246.947,229.253],[1514736000,4,3,11,10,0,11.286,8.016,23.711,10.48],[1514739600,4,0,0,8.9,0,0,8.9,0,0],[1514743200,4,0,0,8.3,0,0,8.3,0,0],[1514746800,4,0,0,7.8,0,0,7.8,0,0],[1514750400,4,0,0,6.7,1.5,0,6.7,0,0],[1514754000,4,0,0,6.7,0,0,6.7,0,0],[1514757600,4,0,0,11.1,4.1,0,11.1,0,0],[1514761200,4,0,0,11.1,3.1,0,11.1,0,0]]&sentat=1471006690&apikey=blahblahblah
ok
```
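Each request above is a GET to `input/bulk.json` whose `data` parameter is an array of frames, each `[timestamp, node, value1, ..., value8]`, followed by `sentat` and `apikey`. A minimal Python sketch of building such a URL from pre-formatted CSV rows, assuming each CSV line already holds one frame in that order (as in the example rows); `bulk_url` is a hypothetical helper, not the attached script. Unlike the raw URLs echoed above, `urlencode` percent-encodes the brackets and commas, which a standard query-string parser decodes back to the same text:

```python
from urllib.parse import urlencode

def bulk_url(base, csv_rows, sentat, apikey):
    """Build an input/bulk.json GET URL from CSV rows.

    Assumes each row is 'timestamp,node,v1,...,v8'; values are copied
    verbatim rather than re-formatted, so 13.9 stays 13.9.
    """
    data = "[" + ",".join("[" + row.strip() + "]" for row in csv_rows) + "]"
    # urlencode keeps key order and percent-encodes '[', ']' and ','
    query = urlencode({"data": data, "sentat": sentat, "apikey": apikey})
    return base + "/input/bulk.json?" + query

rows = [
    "1514296800,4,325,101,13.9,4.6,299.59,19.534,593.214,567.136",
    "1514300400,4,65,115,12.8,4.1,153.136,15.24,311.321,292.203",
]
print(bulk_url("http://localhost", rows, 1471006684, "YOUR_APIKEY"))
```

Sending each URL (for example with `urllib.request.urlopen`) and checking that the body is `ok` would be the natural next step; that check is where a bare loop most easily misses a failed batch.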

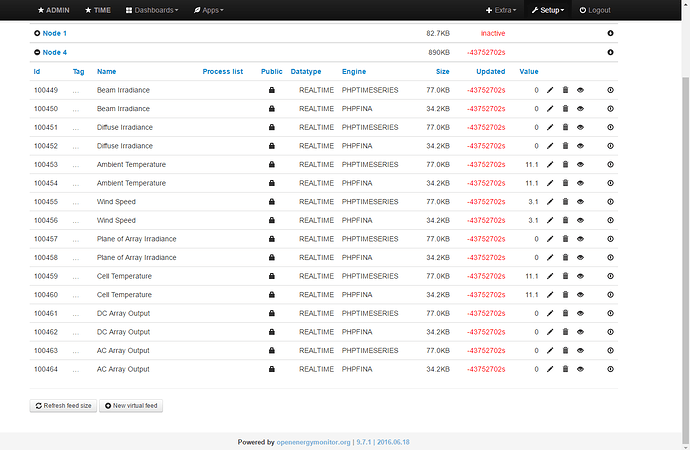

Here is a screenshot of the feeds page showing the data sizes, which appear correct (8760 x 9 bytes = 78840 bytes / 1024 = 76.99 kB, and 8760 x 4 bytes = 35040 bytes / 1024 = 34.22 kB).
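The expected sizes follow from 9 bytes per datapoint for the phptimeseries feed and 4 bytes per 1-hour slot for the phpfina feed, with 1 kB taken as 1024 bytes as in the figures given; the phpfina figure works out to 34.22 kB. A short Python check of that arithmetic (constant names are illustrative):

```python
FRAMES = 8760             # hourly frames in the year

PHPTIMESERIES_BYTES = 9   # bytes per datapoint, as used in the post
PHPFINA_BYTES = 4         # bytes per 1-hour slot, as used in the post

def kib(n_bytes):
    """Convert bytes to kB (1 kB = 1024 bytes), rounded to 2 decimal places."""
    return round(n_bytes / 1024, 2)

ts_bytes = FRAMES * PHPTIMESERIES_BYTES
fina_bytes = FRAMES * PHPFINA_BYTES
print(ts_bytes, kib(ts_bytes))      # 78840 76.99
print(fina_bytes, kib(fina_bytes))  # 35040 34.22
```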

If you have a file for 2016, start by using the formula in the spreadsheet I posted to get a pure CSV file (watch out for Windows/Unix line endings), or give me the file and I will convert it for you.
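A CSV saved on Windows usually ends each line with CRLF (`\r\n`), and a stray `\r` can end up glued to the last value of every frame. The `dos2unix` utility fixes this if it is installed; otherwise a minimal Python sketch of the same conversion (the function names are hypothetical):

```python
def to_unix_line_endings(text):
    """Convert Windows (CRLF) and old Mac (CR) line endings to Unix (LF)."""
    return text.replace("\r\n", "\n").replace("\r", "\n")

def convert_file(src, dst):
    """Rewrite the CSV at `src` to `dst` with LF-only line endings."""
    # newline="" turns off Python's newline translation so '\r' survives the read
    with open(src, "r", newline="") as f:
        text = f.read()
    # newline="\n" writes plain LF on every platform, including Windows
    with open(dst, "w", newline="\n") as f:
        f.write(to_unix_line_endings(text))
```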

I’ve attached the script. You will need to edit it for your own apikey etc., and running it is the final step: the feeds and the CSV all need to be set up first. There is little or no error checking (and I should add some comments too), so you must fully prepare before running it.
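Since the script does little error checking, a quick pass over the CSV before uploading may save a messy partial import. A hypothetical Python pre-flight check (not part of the attached script), assuming 10 comma-separated numeric fields per line (timestamp, node id, then the 8 values) and exactly one frame per hour:

```python
EXPECTED_FIELDS = 10   # timestamp, node id, then the 8 values
INTERVAL = 3600        # seconds between consecutive frames (1-hour data)

def check_rows(lines):
    """Return a list of problems; an empty list means the rows look ready to upload."""
    problems = []
    previous = None
    for n, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines, e.g. a trailing newline at end of file
        if "\r" in line:
            problems.append(f"line {n}: Windows (CRLF) line ending")
        fields = line.strip().split(",")
        if len(fields) != EXPECTED_FIELDS:
            problems.append(f"line {n}: expected {EXPECTED_FIELDS} fields, got {len(fields)}")
            continue
        try:
            values = [float(x) for x in fields]
        except ValueError:
            problems.append(f"line {n}: non-numeric field")
            continue
        timestamp = int(values[0])
        # consecutive frames should be exactly one interval apart
        if previous is not None and timestamp - previous != INTERVAL:
            problems.append(f"line {n}: step of {timestamp - previous} s, expected {INTERVAL}")
        previous = timestamp
    return problems
```

For example, `with open("my_data.csv", newline="") as f: print(check_rows(f))`, where the filename is a placeholder; opening with `newline=""` keeps any `\r` so the CRLF check can see it.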

upload_csv.sh.txt (969 Bytes)

Fancy giving it a go?