Europe is supposed to have a unified grid system, and the agreement is for 230V, not 240V. However, the British grid still mainly runs at 240V because of the cost of replacing or down-tapping the whole country's transformers. I live in a rural area with lots of solar and wind farms. When we had strong winds we saw peaks of up to 279V, the average voltage was 245V, and on many occasions it was over the legally specified upper limit of 253V. I complained to Western Power, who fitted a monitor for a week and agreed the voltage was too high. We and several neighbours on the same transformer have been down-tapped and now average 233V. What the company did not do is investigate why the local wind farms were using what is obviously inadequate voltage regulation equipment.
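For anyone wanting to check their own readings, here is a minimal sketch of where the 253V figure comes from. It assumes the UK statutory tolerance of 230V +10%/-6%; the function name and the sample readings (taken from the figures quoted above) are only for illustration.

```python
# Sketch: classify supply voltage readings against the UK statutory band.
# Assumption: nominal 230 V with a tolerance of +10 % / -6 %.

NOMINAL_V = 230.0
UPPER_LIMIT_V = NOMINAL_V * 1.10  # about 253 V
LOWER_LIMIT_V = NOMINAL_V * 0.94  # about 216.2 V


def classify_reading(volts: float) -> str:
    """Return 'high', 'low' or 'within' for a single voltage reading."""
    if volts > UPPER_LIMIT_V:
        return "high"
    if volts < LOWER_LIMIT_V:
        return "low"
    return "within"


# Figures quoted in the post: wind peak, old average, new average.
for reading in (279.0, 245.0, 233.0):
    print(f"{reading:.0f} V: {classify_reading(reading)}")
```

Note that an average of 245V is inside the band; it is the excursions above 253V that breach the limit.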

If the grid companies were to comply and work towards a stable 230V system, it would cut the nation's bills by around 8-10%, and ensure that appliances lasted longer and operated with greater safety. The saving on bills would of course be short-lived, as the supply companies (French, German & Spanish owned) would very quickly hike up prices to cover their loss of revenue.

What’s needed is a national campaign to get our system running at a stable 230V. It can be done: there are self-regulating transformers available, but the government seems hell-bent on forcing us into having smart meters, an evil that will never enter my home.

It might be worth mentioning - it’s fooled some users from continental Europe - that the UK grid frequency is independent of the rest of Europe, courtesy of course of the d.c. links that connect the two systems.

I’m curious as to how you get an 8-10% saving by reducing the voltage?

The simple answer (possibly wrong) is that it assumes energy use is directly proportional to voltage squared. That’s a dangerous assumption, as it ignores both human behaviour and loads like a battery charger or a thermostatically controlled immersion heater. In the latter case especially, the fallacy is obvious: it just takes longer to heat the water, but the energy input remains the same, as it depends only on the mass of the water and the temperature rise (neglecting a second-order effect: the setpoint is reached later, so the period over which extra energy has to be input to offset cooling is shorter).
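To put rough numbers on that, here is a small sketch of what the voltage-squared assumption predicts and why it does not apply to a heater on a thermostat. The 3 kW element, 100 kg of water and 40 K rise are made-up illustrative values, and standing heat losses are ignored.

```python
# Sketch: the "energy scales with voltage squared" assumption versus a
# thermostatically controlled heater.
# Assumptions (not from the thread): fixed-resistance element rated 3 kW at
# 240 V, 100 kg of water heated by 40 K, no standing losses.

SPECIFIC_HEAT_WATER = 4186.0  # J/(kg*K)


def v_squared_saving(v_old: float, v_new: float) -> float:
    """Fractional power reduction for a fixed resistance (P = V^2 / R)."""
    return 1.0 - (v_new / v_old) ** 2


def heating_time_and_energy(voltage, resistance, mass_kg, delta_t):
    """Return (seconds, joules) needed to heat the water, ignoring losses."""
    power = voltage ** 2 / resistance                 # W, falls with voltage
    energy = mass_kg * SPECIFIC_HEAT_WATER * delta_t  # J, independent of voltage
    return energy / power, energy


resistance = 240.0 ** 2 / 3000.0  # ohms, from the assumed rating
for v in (240.0, 230.0):
    seconds, joules = heating_time_and_energy(v, resistance, 100.0, 40.0)
    print(f"{v:.0f} V: {seconds / 60:.1f} min, {joules / 3.6e6:.2f} kWh")

print(f"V^2 'saving' 240 -> 230 V: {v_squared_saving(240.0, 230.0):.1%}")
```

The voltage-squared figure for 240V to 230V comes out at roughly 8%, which is presumably where the bottom of the quoted 8-10% range comes from. For the heater, the power drops by that amount but the heating time rises to match, so the kWh figure is the same at both voltages.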

In some cases, reduced voltage may actually increase energy use due to the apparatus being less efficient.

There is an energy management consultant who has researched this, and the link shows that the results are at best variable (http://vesma.com/enmanreg/voltage_reduction.htm).

As Robert correctly stated, resistive loads will show no savings: it still takes the same amount of energy to raise, say, the temperature of water in a kettle; it just takes longer. Many laws can be broken, but not the laws of physics. Voltage optimisers have been shown to be beneficial with inductive loads (motors etc.) and with non-output-reliant equipment (TVs, computers and so on). As for the energy suppliers' support for reducing voltage, they see that it can reduce peak demand. When the nation puts on its collective kettles during the ad break for “I’m strictly a big brother baking off in the jungle”, a lower voltage reduces the peak current demand and spreads it over a longer time, so there is less stress on the grid. There was a suggestion that Sky TV should end the practice of aligning the timing of their advert breaks, but the nasty little Australian refused, saying people would channel-hop to avoid the adverts, thereby losing him and Jerry ad revenue.

[Moderator’s note (RW): “I’m strictly a big brother baking off in the jungle” is a made-up name for a TV show, assembled from the names of several popular programmes.]

Our Prime Minister declared recently that if the laws of mathematics conflict with the laws of Australia, then the laws of Australia take precedence.

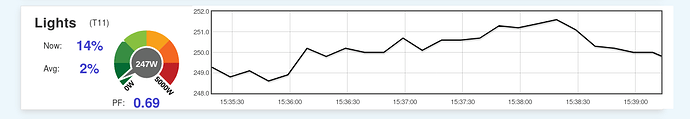

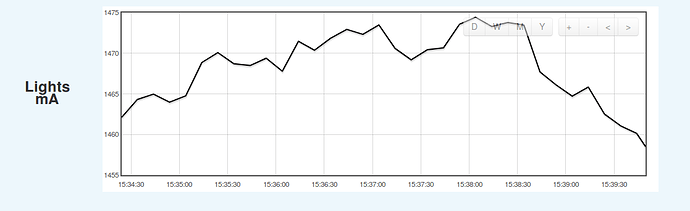

Most switch-mode power supplies have a very flat efficiency vs supply voltage curve, so I doubt you’ll get much saving on your TVs and computers. I just did a quick test with my precision monitor and about 250W worth of lighting (a combination of LEDs and CFLs, but no incandescent), all of which have small SMPSs in them. A 3% drop in supply voltage actually made the power go up slightly. The current also went up to compensate somewhat for the lower voltage, but not by the same proportion, so the power factor of the lights improved slightly. The one thing it didn’t do is lower my energy bill.

A similar test with the pool pump was slightly more rewarding. A 3% supply voltage drop resulted in a 0.9% real power drop (and an impressive 16% reactive power drop).
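One way to compare that result with the voltage-squared claim is to ask what exponent n would make P proportional to V^n fit the measurement. This is only a sketch: the exponential load model is a common simplification, a single measurement point is being fitted, and the function name is made up for illustration.

```python
import math

# Sketch: back out the voltage exponent n in P ~ V**n from one measurement.
# Assumption: the load follows a simple exponential model over this small
# voltage range, which a single test point cannot confirm.


def implied_voltage_exponent(voltage_drop: float, power_drop: float) -> float:
    """Solve (1 - power_drop) = (1 - voltage_drop) ** n for n."""
    return math.log(1.0 - power_drop) / math.log(1.0 - voltage_drop)


# Pool pump figures from the post: 3% voltage drop, 0.9% real power drop,
# 16% reactive power drop.
n_real = implied_voltage_exponent(0.03, 0.009)
n_reactive = implied_voltage_exponent(0.03, 0.16)

print(f"real power exponent:     {n_real:.2f}")
print(f"reactive power exponent: {n_reactive:.2f}")
print("fixed resistance would give an exponent of 2")
```

The real power exponent for the pump works out at about 0.3, a long way short of the 2 that the voltage-squared argument needs.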

I reckon you’re going to struggle to get an 8-10% energy saving, unless you have a lot of incandescent bulbs.

Or unless the excess energy was going to waste anyway. Reading between the lines of the Vesma.com link, that seems to be the most probable explanation.

Interesting article:

https://electricalreview.co.uk/features/8296-the-best-kept-voltage-management-secret

And another that speaks of Japan’s development and use of amorphous core transformers.

Hi,

I’m rather late to this discussion, but I have extensive experience of voltage effects on all types of loads in all sectors. The truth is more complex than you may think. Real power savings do result from proper regulation of voltage for directly connected motor loads (60%+ of industrial load is motors), but the benefit is diminishing as more and more loads are now controlled (frequency and voltage) and can be regarded as ‘electronic’, so that voltage makes very little difference. This is true domestically too, where voltage used to have a significant impact on fridges, freezers, boilers (CH pumps) and fans, but newer models show little or no sensitivity to voltage. Likewise for lighting, particularly with LED lighting becoming the norm. It is now a little out of date, but see my website page on this subject: https://www.redford-tech.co.uk/Voltage.html .

The savings of 8~10% (sometimes a lot more claimed) are mainly associated with loads where reduced voltage gives reduced service (less light for example), but this is not necessarily a ‘bad’ thing when original designs were based on 230/400V supplies.

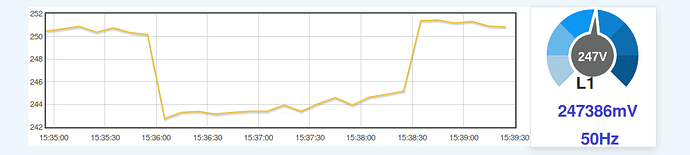

Although the impact of high voltage (I get 248V average in a rural location!) is less important with ‘electronic’ loads, there is still a worthwhile saving to be had from the Distribution network and from ‘legacy’ loads. Voltage optimisation often plays an important role in Distribution Company ‘network innovation’ projects, and its effects on demand are well understood by Electricity Companies. Keep complaining: you will save some kWh and you will prevent PV inverters tripping on G83 settings!