@glyn.hudson - This is interesting, as is emoncms-docker. Can you do a “pros and cons” of each to explain and compare the two approaches?

Do you plan to continue to use both?

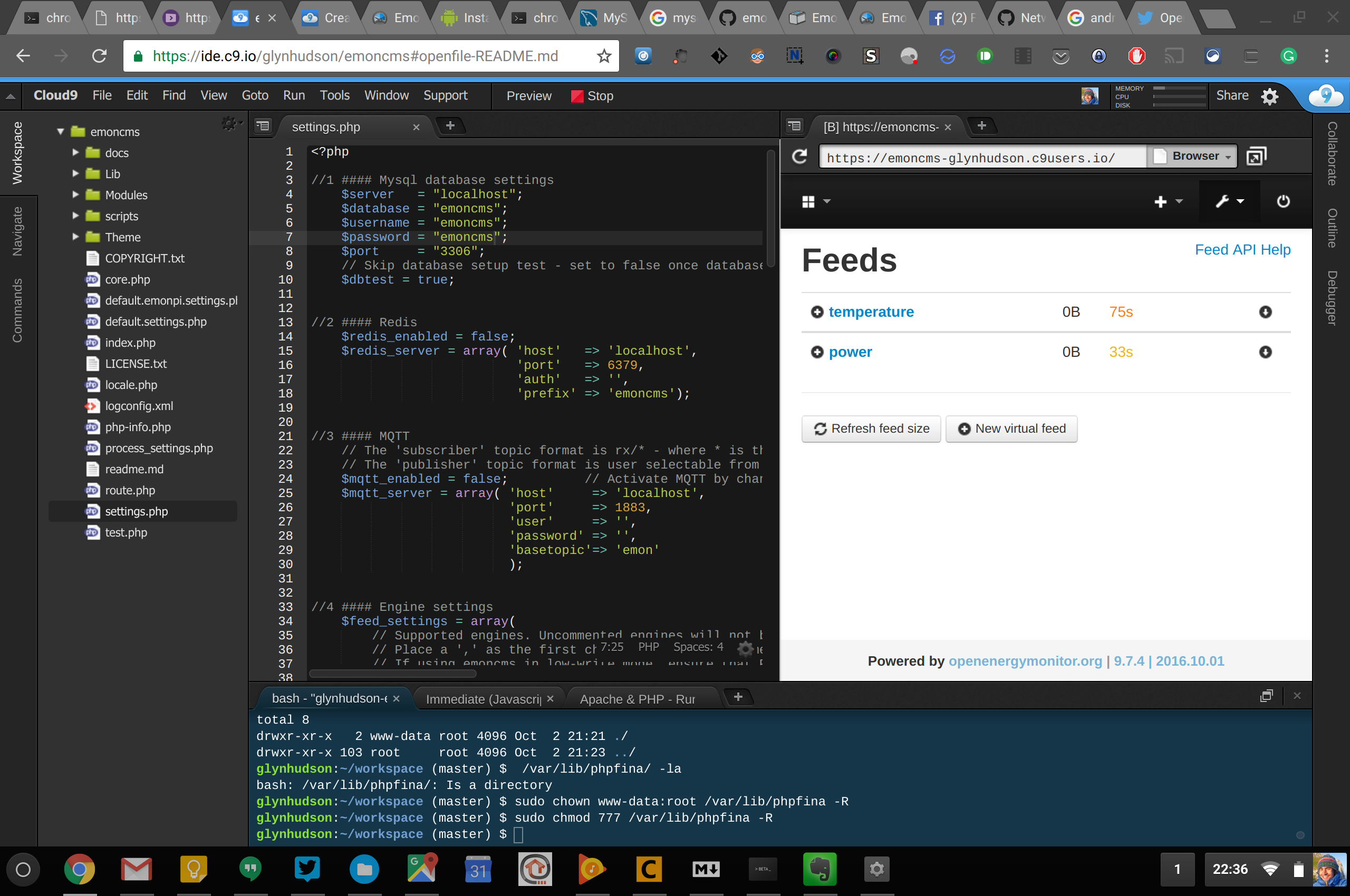

Regarding the dashboards and dummy historical data (“and also a service to hit the API hard with a large volume of incoming data”, as mentioned elsewhere): the dashboards are easily posted via SQL if you know the feed IDs in advance. If you use a specific set and order of device API calls with pre-established input lists, process lists and feed lists on a fresh install, you can predict all the feed IDs, as they start at 0. In fact you can do it at any time if you know the current auto-increment value for each table in question.

When using the device module APIs (or even a feed API), the feeds are not actually created; they only have an entry in the feeds table. The feed itself is created when the first data is written, with the timestamp of that data frame becoming the feed's starting point.

So that means historic data could then be easily added by using a script to create input data along the lines of script_started_at_unixtime + input_frame_timeoffset. For example, 1476008343 (current time) + -63072000 (2 years in seconds) = 1412936343, so the first posted data would be timestamped Fri, 10 Oct 2014 10:19:03 GMT.
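As a rough sketch of that arithmetic in Python: the function name `frame_timestamp` is just for illustration, and the 2-year offset assumes 365-day years, as in the figures above.

```python
from datetime import datetime, timezone

# 2 years expressed in seconds, assuming 365-day years (63072000)
TWO_YEARS_S = 2 * 365 * 24 * 3600


def frame_timestamp(script_started_at, frame_offset_s):
    """Return the unix time to attach to a frame (offset may be negative)."""
    return script_started_at + frame_offset_s


start = 1476008343  # the "current time" used in the example above
first = frame_timestamp(start, -TWO_YEARS_S)
first_utc = datetime.fromtimestamp(first, tz=timezone.utc)

print(first)                  # 1412936343
print(first_utc.isoformat())  # 2014-10-10T10:19:03+00:00
```

Each later frame would simply use a smaller negative offset (or a positive one once the script catches up with real time).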

If you used emonhub to post the data from a CSV text file, the data could be managed and edited in Excel, and emonhub can post it as fast as you like (within reason and hardware/network capability). It is hardcoded to throttle to 250 frames per bulk request, but it could post up to the max size of the buffer (I run buffers of 70,000 frames) as often as every 1 second, assuming the previous request has completed. If emoncms is still working after a battering like that, you can consider the input stages thoroughly tested, and you should have a couple of years or so of data.

The benefit of doing it this way is that you could even use a small set of data and repeat it as many times as you want, adding an element of random adjustment. A single day of emonTx data could be duplicated, say, 10 times with a random +/- 5% applied to each value and a different node ID, and looped 1825 times, incrementing a base starting timestamp by 86400 each loop. This would give you 5 years' worth of data from 10 emonTxs (that's 157,680,000 frames at a 10 s interval), at a posting rate set (and editable on the fly) in emonhub.conf.
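A minimal sketch of that replication loop in Python. The frame layout `[timestamp, node_id, value, ...]`, the function name `synth_frames` and the rounding to 2 decimal places are all assumptions for illustration; it only generates frames and leaves the actual posting to emonhub or whatever script you use.

```python
import random

DAY_S = 86400  # seconds per day, the per-loop timestamp increment


def synth_frames(template, base_ts, days, node_ids, jitter=0.05, seed=None):
    """Yield frames built by repeating a one-day template.

    template: list of (offset_in_seconds, [values]) covering a single day.
    Each value gets an independent random +/- jitter (0.05 = 5%).
    """
    rng = random.Random(seed)
    for day in range(days):
        day_start = base_ts + day * DAY_S
        for offset, values in template:
            for node in node_ids:
                jittered = [round(v * (1 + rng.uniform(-jitter, jitter)), 2)
                            for v in values]
                yield [day_start + offset, node] + jittered


# Tiny demonstration: 2 template frames, 3 days, 2 nodes -> 12 frames
demo_template = [(0, [100.0, 240.0]), (10, [105.0, 241.0])]
demo = list(synth_frames(demo_template, 1412936343, 3, [10, 11], seed=1))
print(len(demo))

# Frame count for the full case described above:
# 10 nodes x 1825 days x one frame every 10 s
total_frames = 10 * 1825 * (DAY_S // 10)
print(total_frames)  # 157680000
```

Because it is a generator, the full 5-year run never has to sit in memory; frames can be written to a CSV or handed to the poster in batches.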

That same script (or EmonHubTestCMSInterfacer?) could then continue to post to those same inputs indefinitely. The only downside to such a small set of template data is that there would be no seasonal changes, unless you cycled a full year's worth of data or wrote in some clever seasonal adjustments based on the calculated timestamp (perhaps a bit OTT for a test server, but possible).

Using the same frame multiple times means you can also reuse the same single device template, and the same dashboards too, by calculating and substituting the feed IDs before posting. I already use the device templates and have a system to automate adding cross-device totalling etc.
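To illustrate the calculate-and-substitute step, here is a hedged Python sketch. It assumes each device creates the same feeds in the same order with consecutive IDs, and it uses a made-up `{{feed:name}}` placeholder syntax in the dashboard text; neither the placeholder format nor the function names come from emoncms itself.

```python
def feed_ids_for_device(next_feed_id, device_index, feed_names):
    """Predict feed IDs for the Nth device (0-based).

    next_feed_id: the feeds table's current auto-increment value
                  before any of the devices are created.
    Assumes every device adds len(feed_names) feeds, in order.
    """
    base = next_feed_id + device_index * len(feed_names)
    return {name: base + i for i, name in enumerate(feed_names)}


def render_dashboard(template, feed_ids):
    """Replace each hypothetical {{feed:name}} placeholder with its ID."""
    out = template
    for name, fid in feed_ids.items():
        out = out.replace("{{feed:%s}}" % name, str(fid))
    return out


feeds = ["power", "energy"]
ids = feed_ids_for_device(next_feed_id=1, device_index=2, feed_names=feeds)
dashboard = render_dashboard(
    '{"power_feedid": {{feed:power}}, "kwh_feedid": {{feed:energy}}}', ids)
print(ids)
print(dashboard)
```

The rendered text could then be inserted via SQL as described earlier, once per device.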