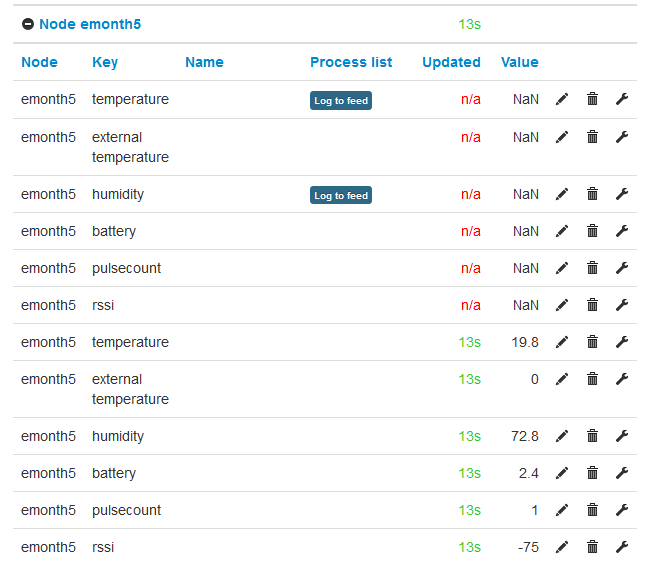

Hi, does this look familiar to someone? Something I did or forgot to do?

Can you delete the ‘dead’ inputs, and add your feed processes to the ‘live’ inputs?

Do the ‘dead’ inputs re-appear?

Paul

Hmm, it worked. It’s not very satisfying, though, not to know if or when it will happen again.

Very familiar. The easiest way forward is to delete the new inputs, and the old ones will spring to life again.

In my experience it is more likely to happen after several reboots in succession. See thread below for more details

That could well be, I had a few involuntary reboots due to power loss the last time… thanks!

Zombie thread alert!

Hello chaps did anything become of this issue?

I’ve just had it again, which may well have followed a reboot. I’m not 100% sure, as it took me over two weeks to notice the lack of data.

Kevin’s solution of deleting the phantom inputs did work, but took a while as I have dozens of the things.

Is it now just part of the reboot procedure to check the inputs haven’t duplicated?

I do not think it has been specifically addressed, but there do seem to be fewer cases reported these days. I think that is because there are fewer issues triggering the problem, rather than emoncms being better placed to avoid it.

There are some changes in the pipeline for “indexed inputs” which may (or may not) directly impact this issue, maybe @TrystanLea can confirm whether that might be the case?

When this issue occurs, a simple way to recover is to use the http(s)://server.com/emoncms/input/clean API call. This call deletes all inputs that do not have any processes attached, so I tend to add a redundant process to any valid inputs that I do not actually use, just to ensure they do not get deleted when I use input/clean.
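To make the effect of input/clean concrete, here is a small offline model of the behaviour described above. It does not call emoncms at all; the dictionaries, the `processList` strings and the `clean_inputs` helper are made up for illustration and only mimic "delete every input with no processes attached".

```python
def clean_inputs(inputs):
    """Model of input/clean as described in the post: drop every input
    that has no processes attached. Returns (kept_inputs, deleted_count)."""
    kept = [inp for inp in inputs if inp["processList"].strip()]
    return kept, len(inputs) - len(kept)


# Hypothetical state after a duplication event (ids and values are invented).
inputs = [
    {"id": 3, "nodeid": "10", "name": "1", "processList": "1:12"},  # original, logs to a feed
    {"id": 4, "nodeid": "10", "name": "2", "processList": "2:1"},   # unused input, protected by a redundant process
    {"id": 57, "nodeid": "10", "name": "1", "processList": ""},     # duplicate, no processes
    {"id": 58, "nodeid": "10", "name": "2", "processList": ""},     # duplicate, no processes
]

kept, deleted = clean_inputs(inputs)
print("kept ids:", [inp["id"] for inp in kept], "deleted:", deleted)
```

The unused input with id 4 survives only because of its placeholder process, which is the reason for adding one.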

Since this API can be called using a read/write apikey, it is possible to set up a watchdog script or Node-RED flow to fire off an input/clean API call if an input stops updating. I personally do not like “fixes” like this, but if you are suffering frequently and a fix isn’t in the pipeline, it might be worth considering.
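A watchdog along those lines could look something like the sketch below. Treat it as a starting point only: the base URL, the apikey placeholder and the one-hour threshold are assumptions, and it assumes that `input/list.json` returns a JSON array whose entries carry a `processList` string and a `time` field holding the last update as a Unix timestamp. Check that against your own install before relying on it.

```python
import json
import time
import urllib.parse
import urllib.request

# Placeholders, adjust for your own system.
EMONCMS_URL = "http://localhost/emoncms"
APIKEY = "YOUR_READWRITE_APIKEY"
STALE_AFTER_S = 3600  # treat an input as stuck after one hour without updates


def http_get(path):
    """GET an emoncms API path with the apikey and return the body as text."""
    url = f"{EMONCMS_URL}/{path}?" + urllib.parse.urlencode({"apikey": APIKEY})
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8")


def stale_inputs(inputs, now, max_age=STALE_AFTER_S):
    """Return inputs that have processes attached but have not updated recently."""
    stale = []
    for inp in inputs:
        last = inp.get("time")  # assumed field name, may be null for new inputs
        if inp.get("processList") and last is not None and now - float(last) > max_age:
            stale.append(inp)
    return stale


def watchdog(fetch=http_get, now=None):
    """Call input/clean if any in-use input has gone quiet.
    Returns the response text from input/clean, or None if nothing was done."""
    now = time.time() if now is None else now
    inputs = json.loads(fetch("input/list.json"))
    if stale_inputs(inputs, now):
        return fetch("input/clean.json")
    return None
```

Calling `watchdog()` from cron every few minutes would be one way to run it. Only inputs with processes attached are considered, since the duplicates have none and are the ones input/clean removes.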

Once the newly created set of duplicate inputs are deleted, the original inputs automatically start updating again.

I believe this is because the inputs table is queried in natural order, from low input ids through to higher ones, so the last “node 10, input 1” it encounters is the newest. I wonder if this could be fixed by simply reversing that search order (if that’s even possible) so that it always finds the original after any duplicates. It wouldn’t necessarily stop the inputs being duplicated, but the very next set of data would update the original inputs rather than the duplicates, so only a single update is lost. Just a thought! @TrystanLea?
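To illustrate the idea (this is a toy model of the theory above, not the actual emoncms code), suppose the lookup from (node, input name) to input id is built by walking the table rows in order, with later rows overwriting earlier ones. The rows and the `build_lookup` helper here are hypothetical.

```python
def build_lookup(rows, newest_first=False):
    """Map (nodeid, name) -> input id by walking rows in id order.
    A later row overwrites an earlier one with the same key."""
    ordered = sorted(rows, key=lambda r: r["id"], reverse=newest_first)
    lookup = {}
    for row in ordered:
        lookup[(row["nodeid"], row["name"])] = row["id"]
    return lookup


rows = [
    {"id": 3, "nodeid": "10", "name": "1"},   # original input, has the processes
    {"id": 57, "nodeid": "10", "name": "1"},  # duplicate created after a reboot
]

# Ascending order: the duplicate is seen last, so new data lands on it.
print(build_lookup(rows)[("10", "1")])

# Reversed order: the original is seen last, so new data lands on it.
print(build_lookup(rows, newest_first=True)[("10", "1")])
```

In this model the first lookup resolves to the duplicate (id 57) and the reversed one resolves to the original (id 3), which is the behaviour the suggested change is hoping for.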

[edit]

Issue raised so it hopefully doesn’t drop off the radar and get resolved down the line.

It’s happened to me twice in the last few weeks after rebooting

Hello @Bra1n, could you give us a bit more info on your system? Could you copy here the Server Information section from the emoncms admin page?

I use MySQL for feed storage as I can then easily do other enquiries on the database (e.g report inactive feeds)

Server Information

Services

emonhub : Active Running

emoncms_mqtt : Active Running

feedwriter : Inactive Dead (Service is not running)

service-runner : Active Running

emonPiLCD : Inactive Dead

redis-server : Active Running

mosquitto : Active Running

demandshaper : Active Running

Emoncms

Version : 10.2.7

Modules : Administration | App v2.2.5 | Backup v2.2.6 | EmonHub Config v2.0.5 | Dashboard v2.0.9 | DemandShaper v2.1.2 | Device v2.0.7 | EventProcesses | Feed | Graph v2.0.9 | Input | Postprocess v2.1.4 | CoreProcess | Schedule | Network Setup v1.0.0 | sync | Time | User | Visualisation | WiFi v2.0.3

Git :

URL : GitHub - emoncms/emoncms: Web-app for processing, logging and visualising energy, temperature and other environmental data

Branch : * stable

Describe : 10.2.7

Server

OS : Linux 5.4.83-v7l+

Host : raspberrypi2 | 192.168.1.61 | (192.168.1.61)

Date : 2021-01-20 12:06:53 UTC

Uptime : 12:06:53 up 2 days, 22:24, 0 users, load average: 0.71, 0.47, 0.39

Memory

RAM : Used: 3.85%

Total : 7.74 GB

Used : 305.01 MB

Free : 7.44 GB

Swap : Used: 0.00%

Total : 100 MB

Used : 0 B

Free : 100 MB

Write Load Period

Disk

/ : Used: 2.85%

Total : 109.8 GB

Used : 3.12 GB

Free : 102.19 GB

Write Load : n/a

/boot : Used: 17.93%

Total : 252.05 MB

Used : 45.19 MB

Free : 206.86 MB

Write Load : n/a

/var/log : Used: 7.53%

Total : 50 MB

Used : 3.77 MB

Free : 46.23 MB

Write Load : n/a

HTTP

Server : Apache/2.4.38 (Raspbian) HTTP/1.1 CGI/1.1 80

MySQL

Version : 5.5.5-10.3.27-MariaDB-0+deb10u1

Host : localhost (127.0.0.1)

Date : 2021-01-20 12:06:53 (UTC 00:00)

Stats : Uptime: 253485 Threads: 22 Questions: 3792173 Slow queries: 0 Opens: 144 Flush tables: 1 Open tables: 136 Queries per second avg: 14.960

Redis

Version :

Redis Server : 5.0.3

PHP Redis : 5.3.2

Host : localhost:6379

Size : 342 keys (814.45K)

Uptime : 2 days

MQTT Server

Version : Mosquitto 1.6.12

Host : localhost:1883 (127.0.0.1)

PHP

Version : 7.3.19-1~deb10u1 (Zend Version 3.3.19)

Modules : apache2handler bz2 calendar Core ctype curl date dom v20031129 exif fileinfo filter ftp gd gettext hash iconv json v1.7.0 libxml mbstring mosquitto v0.4.0 mysqli mysqlnd vmysqlnd 5.0.12-dev - 20150407 - $Id: 7cc7cc96e675f6d72e5cf0f267f48e167c2abb23 $ openssl pcre PDO pdo_mysql Phar posix readline redis v5.3.2 Reflection session shmop SimpleXML sockets sodium SPL standard sysvmsg sysvsem sysvshm tokenizer wddx xml xmlreader xmlwriter xsl Zend OPcache zip v1.15.4 zlib

Pi

Model : Raspberry Pi Model N/A Rev - ()

Serial num. : 1000000065D096CE

CPU Temperature : 42.36°C

GPU Temperature : 43.3°C

emonpiRelease : emonSD-02Oct19

File-system : read-only

Client Information

HTTP

Browser : Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.141 Safari/537.36

Language : en-GB,en-US;q=0.9,en;q=0.8

Window

Size : 1851 x 1058

Screen

Resolution : 2195 x 1235

Thank you very much for the information provided. I had been struggling with this duplication for two months when I came across this discussion. Every time I have a power failure I get duplicates. I am monitoring 150 Pis and 15000 feeds. Using “http://10.10.2.100/emoncms/input/clean” has saved me hours of work and reduced the amount of data I would otherwise have lost.

I now reboot my Emoncms Pi every night (due to a backup regime that doesn’t leave Emoncms in a good state after stopping and starting services), so I’m getting frequent duplications. I run input/clean when Node-RED detects feeds that haven’t updated for more than one hour.