@dc1970, did you try running this? Any exception raised in the Python code would show up here.

Hi,

I did purchase the power adapter with the emonPi, and I have been running the journalctl command mentioned above. It has not reported any issues.

One observation I can add is that the comms between the emonPi and the emonTH seem a lot more reliable when I have an SSH session connected. I don't know the intricacies of the comms between the units yet, but it is starting to look more and more like either a power-saving feature that puts something into sleep mode, or a comms failure that exceeds its maximum retries and then fails silently.

Can the logging output be changed through the config, or would I need to modify the code, which I believe is available on GitHub? If I modify the code, can a custom version be loaded onto the emonPi, and is there a guide for doing so? I haven't done any Pi software development, but I am a software developer, so I would be capable of making changes with a bit of time and effort.

Thanks

David

But unless you were actually watching it, you don't know when the last RFM message was received relative to the log message. You could try this (I'm running it myself to see if it works):

tail -f /var/log/emonhub/emonhub.log | grep --line-buffered "NEW FRAME : OK 19" | perl -MPOSIX -pe '$| = 1; $_ = strftime("%T ", localtime) . $_'
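A minimal bash sketch of the same idea, which also prints how many seconds have passed since the previous frame, so the gaps before a dropout are visible at a glance. It assumes GNU `grep` and GNU `date` (as shipped with Raspbian); the function name `frame_gaps` is hypothetical, and the node ID defaults to 19 as in the command above.

```shell
# Hypothetical helper: reads emonhub log lines on stdin, keeps only frames from
# the given node (default 19), and prefixes each one with the local time it was
# seen plus the number of seconds since the previous matching frame.
frame_gaps() {
  local node="${1:-19}" prev="" now line
  # --line-buffered (GNU grep) flushes each matching line as soon as it is
  # found, instead of holding output back while writing into a pipe.
  grep --line-buffered "NEW FRAME : OK ${node}" | while IFS= read -r line; do
    now=$(date +%s)
    if [ -n "$prev" ]; then
      # Seconds elapsed since the previous frame from this node.
      printf '%s +%ss %s\n' "$(date -d "@$now" +%T)" "$((now - prev))" "$line"
    else
      # First frame seen: there is no previous frame to measure from.
      printf '%s +?s %s\n' "$(date -d "@$now" +%T)" "$line"
    fi
    prev=$now
  done
}
```

Usage: `tail -f /var/log/emonhub/emonhub.log | frame_gaps 19`. Reading the timestamps from the `date` call rather than from the log line means the output reflects when each frame actually reached the terminal.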

I do appreciate what you are seeing, but I really don't think the logging or closing the SSH session has anything to do with it: there are hundreds of these devices out in the wild, and you seem to be the only one with this issue.

If you just restart emonhub (e.g. from the Web UI), data from the emonTH starts arriving again, correct?

Have you run an update?

If so, you could try reflashing the SD card with a fresh image on the off chance that something is not quite right.

Otherwise, I think you need to email the shop and ask them. You could perhaps try a second emonTH; if both behave the same way, I suggest it is probably a hardware fault on the emonPi. When you email, please reference this discussion.

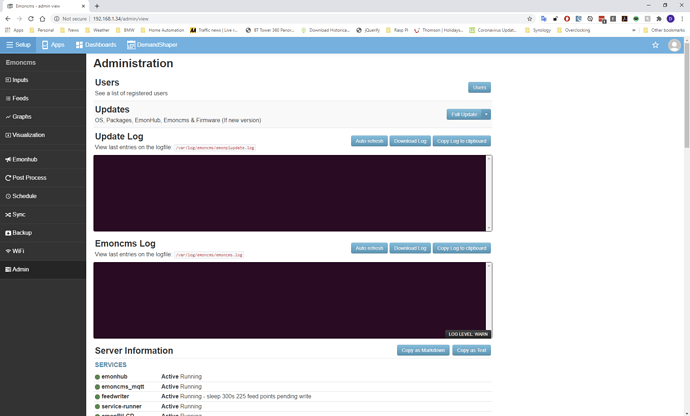

Here is something I hadn't noticed before: this is the top of the admin page, and all the logs are empty.

And here is the server info from the same time:

Server Information

Services

- emonhub :- Active Running

- emoncms_mqtt :- Active Running

- feedwriter :- Active Running - sleep 300s 225 feed points pending write

- service-runner :- Active Running

- emonPiLCD :- Active Running

- redis-server :- Active Running

- mosquitto :- Active Running

- demandshaper :- Active Running

Emoncms

- Version :- low-write 10.2.3

- Modules :- Administration | App v2.1.6 | Backup v2.2.3 | EmonHub Config v2.0.5 | Dashboard v2.0.7 | DemandShaper v1.2.5 | Device v2.0.5 | EventProcesses | Feed | Graph v2.0.9 | Input | Postprocess v2.1.4 | CoreProcess | Schedule | Network Setup v1.0.0 | sync | Time | User | Visualisation | WiFi v2.0.2

- Git :-
  - URL :- GitHub - emoncms/emoncms: Web-app for processing, logging and visualising energy, temperature and other environmental data
  - Branch :- * stable
  - Describe :- 10.2.3

Server

- OS :- Linux 4.19.75-v7+

- Host :- emonpi | emonpi | (192.168.1.34)

- Date :- 2020-07-27 09:29:43 BST

- Uptime :- 09:29:43 up 5 days, 13:23, 0 users, load average: 0.13, 0.11, 0.09

Memory

- RAM :- Used: 20.74%
  - Total :- 975.62 MB
  - Used :- 202.31 MB
  - Free :- 773.31 MB
- Swap :- Used: 0.00%
  - Total :- 100 MB
  - Used :- 0 B
  - Free :- 100 MB


Disk

- / :- Used: 51.10%
  - Total :- 3.92 GB
  - Used :- 2.01 GB
  - Free :- 1.73 GB
  - Write Load :- 757.62 B/s (5 days 12 hours 52 mins)
- /boot :- Used: 20.55%
  - Total :- 252.05 MB
  - Used :- 51.79 MB
  - Free :- 200.26 MB
  - Write Load :- 0 B/s (5 days 12 hours 52 mins)
- /var/opt/emoncms :- Used: 0.05%
  - Total :- 9.98 GB
  - Used :- 5.24 MB
  - Free :- 9.47 GB
  - Write Load :- 58.38 B/s (5 days 12 hours 52 mins)
- /var/log :- Used: 2.18%
  - Total :- 50 MB
  - Used :- 1.09 MB
  - Free :- 48.91 MB
  - Write Load :- n/a

HTTP

- Server :- Apache/2.4.38 (Raspbian) HTTP/1.1 CGI/1.1 80

MySQL

- Version :- 5.5.5-10.3.17-MariaDB-0+deb10u1

- Host :- localhost:6379 (127.0.0.1)

- Date :- 2020-07-27 09:29:42 (UTC 01:00)

- Stats :- Uptime: 480716 Threads: 12 Questions: 369304 Slow queries: 0 Opens: 51 Flush tables: 1 Open tables: 42 Queries per second avg: 0.768

Redis

- Version :-
  - Redis Server :- 5.0.3
  - PHP Redis :- 5.0.2
- Host :- localhost:6379
- Size :- 125 keys (869.79K)
- Uptime :- 5 days

MQTT Server

- Version :- Mosquitto 1.5.7

- Host :- localhost:1883 (127.0.0.1)

PHP

- Version :- 7.3.9-1~deb10u1 (Zend Version 3.3.9)

- Modules :- apache2handler | calendar | Core | ctype | curl | date | dom v20031129 | exif | fileinfo | filter | ftp | gd | gettext | hash | iconv | json v1.7.0 | libxml | mbstring | mosquitto v0.4.0 | mysqli | mysqlnd vmysqlnd 5.0.12-dev - 20150407 - $Id: 7cc7cc96e675f6d72e5cf0f267f48e167c2abb23 $ | openssl | pcre | PDO | pdo_mysql | Phar | posix | readline | redis v5.0.2 | Reflection | session | shmop | SimpleXML | sockets | sodium | SPL | standard | sysvmsg | sysvsem | sysvshm | tokenizer | wddx | xml | xmlreader | xmlwriter | xsl | Zend OPcache | zlib

Pi

- Model :- Raspberry Pi 3 Model B Rev 1.2 - 1GB (Embest)
- Serial num. :- 539EE22D
- CPU Temperature :- 44.01°C
- GPU Temperature :- 44.5°C
- emonpiRelease :- emonSD-17Oct19
- File-system :- read-write

Have you tried running a 'Full Update'?

Can you check the permissions on the folders /var/log and /var/log/emonhub/?
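One way to check, sketched as a small hypothetical helper (`show_perms` is not part of emonhub or emoncms). It assumes GNU `stat` as shipped with Raspbian; `ls -ld /var/log /var/log/emonhub` shows the same information without any helper.

```shell
# Hypothetical helper: for each path given, print its permission string,
# owner:group and name using GNU stat's format option (-c).
show_perms() {
  local d
  for d in "$@"; do
    stat -c '%A %U:%G %n' "$d"
  done
}
```

Usage: `show_perms /var/log /var/log/emonhub`, which prints one line per directory, e.g. a `drwxr-xr-x`-style mode followed by the owning user and group.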

Hi,

Yes, the feed restarts if I restart emonhub through the Web UI.

Yes, I ran a full update on the day I initially set up the emonPi and, from memory, nothing was updated because it was all up to date. I ran it again this morning after my previous post, and again no updates were reported in the log.

I have been in contact with the store, and they seem to think it's an issue with the emonPi, so they are sending me a replacement.

Thank you both for your help and time on this issue; hopefully the new unit will fix the problem.

Another observation: the time between failures seems to be increasing. When I first noticed the problem it was anywhere from 3 to 6 hours, and over the last week it has slowly increased to the point where it now runs at least 12 hours between failures.