I was looking at the Dropbox Backup threads [1] [2] in the old forum, but I don’t want to use Dropbox. So I was thinking about using rclone with Amazon Cloud Drive or Amazon S3. I am curious whether anyone has used rclone and has any comments about it. I can find lots of “hey, you should use this” type references to rclone, but no real reviews or good/bad comments. Thoughts?

I haven’t looked at rclone in detail, but I suspect the biggest problem will be archive management, especially the weeding of older archives.

If run in sync mode, you could end up syncing corrupt local data, which would leave you no way to roll back.

That’s why I developed the dropbox archive: it can be rolled back several days, so an uncorrupted archive is always available.
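The difference between a mirror and a rolling archive is the weeding step. A minimal Python sketch of “keep the newest N, delete the rest” is below. It is not the dropbox-archive script’s code (that script is PHP and works against Dropbox); the local directory, the `*.tar.gz` naming and the function name are assumptions for illustration.

```python
from pathlib import Path


def weed_archives(backup_dir: str, keep: int, pattern: str = "*.tar.gz") -> list[Path]:
    """Delete all but the `keep` newest archives in backup_dir.

    Archives are ranked by modification time, newest first. Returns the
    deleted paths so the caller can log them.
    """
    if keep < 1:
        # Keeping zero archives would defeat the point of a rollback window.
        raise ValueError("keep must be at least 1")
    archives = sorted(
        Path(backup_dir).glob(pattern),
        key=lambda p: p.stat().st_mtime,
        reverse=True,
    )
    expired = archives[keep:]
    for path in expired:
        path.unlink()
    return expired
```

Run after a successful upload with `keep=7`, a backup of corrupt data is just one more archive, and the older, good ones remain available until they age out.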

What’s the issue with Dropbox? It’s free, and it works.

Paul

Right now I use rsync with --archive and --link-dest on my local network. That allows me to roll back many days (currently back to the beginning of the year). It seems to work A-OK, but I’d rather have something external. I had not thought about grabbing a corrupt file or data!
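For reference, that rsync scheme can be sketched in Python: each run writes a dated snapshot directory, and --link-dest hard-links files that have not changed since the previous snapshot, so every snapshot looks complete but only changed files use new space. The snapshot naming (ISO dates under one root) and the function name are assumptions; `-a` and `--link-dest` are standard rsync options.

```python
import datetime
from pathlib import Path


def rsync_snapshot_cmd(src: str, snapshot_root: str, today: datetime.date) -> list[str]:
    """Build an rsync command that writes a dated snapshot of src.

    Files unchanged since the newest existing snapshot are hard-linked to it
    via --link-dest, so each snapshot is complete but only changes use space.
    """
    root = Path(snapshot_root)
    dest = root / today.isoformat()
    # ISO dates sort chronologically, so the last match is the newest snapshot.
    previous = sorted(p for p in root.glob("????-??-??") if p.is_dir() and p != dest)
    cmd = ["rsync", "-a"]
    if previous:
        # An absolute path avoids rsync resolving it relative to the destination.
        cmd.append(f"--link-dest={previous[-1].resolve()}")
    # A trailing slash on the source copies its contents, not the directory itself.
    cmd += [src.rstrip("/") + "/", str(dest)]
    return cmd
```

The returned list can be passed to `subprocess.run(cmd, check=True)`; the source and snapshot root you choose (for example a hypothetical `/mnt/backup/snapshots`) are up to you.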

The only issue with Dropbox is it is always filling up. I share too many folders with family and someone adds an album of pics and boom - no more room.

Why not register another Dropbox account using a pseudo email address and use it solely for backups? The dropbox archive script is install-and-forget, because it automatically weeds out older archives and stores your newer ones, which can be retrieved if you have a problem.

Up to you!

Paul

Hmm - that is a good thought. When you run the Dropbox Backup script and it saves 7 days of data, how much of your Dropbox space is used?

That depends how much data you have.

The archives are compressed before uploading, but if you refresh the feed size from your feeds page and note how much data you have, that will give you a clue. My total feed size is 72MB, yet compressed it shrinks to just 15MB, and that includes the MySQL dump and Node-RED backup too.

If you then store 7 days’ worth of archives (you can store any number you wish), it’s 8 x 15MB = 120MB (it uploads the 8th archive before deleting the expired one).

If you opted for 3 days of archives, that would be 4 x 15MB = 60MB.
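The same arithmetic as a small Python helper; the +1 accounts for the new archive being uploaded before the expired one is deleted. The 15MB archive size is Paul’s figure, and yours will differ with your feed data.

```python
def dropbox_space_mb(archive_mb: float, days: int) -> float:
    """Peak Dropbox space used when keeping `days` archives of archive_mb each.

    One extra archive is counted because the new archive is uploaded
    before the expired one is deleted.
    """
    return (days + 1) * archive_mb


print(dropbox_space_mb(15, 7))  # 120
print(dropbox_space_mb(15, 3))  # 60
```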

Paul

Paul - I was going to give the Dropbox Archive script a try. This is on an emonPi and I did see the “not tested” warning. I think I have enough data space. Any other watch outs?

    pi@emonpi:~ $ df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/root       3.4G  2.3G  964M  71% /
    /dev/mmcblk0p1   60M   20M   41M  34% /boot
    /dev/mmcblk0p3  3.8G  582M  3.0G  16% /home/pi/dat

A few things to watch for:

- It needs to be installed in the writable partition, /home/pi/data.
- The first time that you run the script, the root filesystem needs to be in rw (read-write) mode, as the setup process writes a small config file to the system root.
- Make sure that your paths are correct in config.php: the emoncms data paths (would yours be /home/pi/data?), and I’m sure that your .node-red directory has also been moved from the default location (you could leave the node-red backup config setting as ‘N’ initially).
- Apart from the very small config file, everything is contained within the dropbox-archive directory, so if there are problems, you can easily delete the whole directory and start again.
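A rough pre-flight check covering those points might look like this in Python. It is not part of dropbox-archive; the function name and passing the configured paths as a dict are assumptions, and `os.access` on `/` is only a proxy for “root filesystem is rw” (it also returns False when you simply lack permission, so run it with sudo as you would the script).

```python
import os
from pathlib import Path


def preflight(install_dir: str, paths: dict[str, str],
              writable_root: str = "/home/pi/data") -> list[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    install = Path(install_dir).resolve()
    data_root = Path(writable_root).resolve()
    # 1. The app should live inside the writable data partition.
    if install != data_root and data_root not in install.parents:
        problems.append(f"{install} is not inside the writable partition {data_root}")
    # 2. The first run writes a config file outside that partition, so / must be rw.
    #    access() also fails for plain permission reasons, so run this with sudo.
    if not os.access("/", os.W_OK):
        problems.append("/ is not writable; remount the root filesystem rw for the first run")
    # 3. Every configured directory (data dir, Node-RED dir, ...) must exist.
    for name, path in paths.items():
        if not Path(path).is_dir():
            problems.append(f"{name} = {path!r} is not an existing directory")
    return problems
```

For example, `preflight("/home/pi/data/myApps/dropbox-archive", {"datadir": "/home/pi/data", "NRdir": "/home/pi/.node-red"})` would flag a Node-RED directory that has been moved away from its default location.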

Paul

Paul - I cannot get the dropbox archive script to work. I keep getting this error:

    ...
    PHPTIMESERIES: 91
    --downloaded: 459 bytes
    PHPTIMESERIES: 92
    --downloaded: 206730 bytes
    PHPFINA: 95
    --downloaded: 65124 bytes
    Dumping MYSQL data
    Backing up node-red data
    An error has occured, details:tar-based phar "/home/pi/data/myApps/dropbox-archive/backups/archive.tar" cannot be created, header for file "nodered/.flows_emonpi_cred.json.backup" could not be writtenChecking for expired archives...
    find: `*.gz': No such file or directory
    Uploading new archive to Dropbox, this may take a while...

Is the backup directory supposed to be owned by root:root?

drwxr-xr-x 2 root root 1024 May 24 21:43 backups

Here is the config file:

    $emoncms_server = "http://192.168.40.230/emoncms"; //emoncms local IP address
    $emoncms_apikey = "myAPIkey"; // Needs to be emoncms write API key
    $dbuser = "emoncms"; // Database user name - default is emoncms
    $dbpass = "emonpiemoncmsmysql2016"; // Database user password
    $dbname = "emoncms"; // Database name - default is emoncms
    $datadir = "/home/pi/data/mysql"; // path to emoncms data directories
    $store = "7"; // Number of days of archives to store
    // Create archive backups of node-red flows, configs and credentials
    $nodered = "Y"; // options Y or N
    $NRdir = "/home/pi/.node-red"; //Node-red backup dir, default is /home/pi/.node-red
    // but will be different for an emonpi

EDIT: I can get the dropbox-uploader script to run fine. I just picked a random directory and uploaded it to dropbox. All files and directories uploaded as expected.

Presumably you’re initially running the script with sudo php backup.php from within the dropbox-archive directory.

Is $datadir in your config file correctly set to your emoncms data directories (phpfina, phptimeseries etc.)? My data files are stored in /var/lib/phpfina etc., so my config file has $datadir = "/var/lib";
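One quick way to confirm $datadir is right is to check that it actually contains the engine directories. A minimal Python sketch, assuming the engines are phpfina and phptimeseries (the two that appear in the log above); a site using other engines would extend the tuple, and the function name is hypothetical.

```python
from pathlib import Path

# Assumed engine directory names, taken from the engines seen in the log.
ENGINES = ("phpfina", "phptimeseries")


def missing_engine_dirs(datadir: str, engines: tuple[str, ...] = ENGINES) -> list[str]:
    """Return the engine subdirectories that are not present under datadir."""
    base = Path(datadir)
    return [engine for engine in engines if not (base / engine).is_dir()]
```

On a layout like Paul’s, `missing_engine_dirs("/var/lib")` should return an empty list; a non-empty result suggests $datadir points at the wrong place.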

Can you run the script if $nodered in config is set to N?

The temp_data directory within dropbox-archive is normally deleted by the script once its contents have been archived. If it’s still present (because the script didn’t complete), delete it before re-running the script (I must add a check in the script to do this automatically!)
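That automatic check could be as simple as the Python sketch below, run at the very start of a backup. The function name is hypothetical; temp_data is the staging directory name the script uses.

```python
import shutil
from pathlib import Path


def clear_stale_staging(app_dir: str, name: str = "temp_data") -> bool:
    """Delete a staging directory left behind by an interrupted run.

    Returns True if a leftover directory was found and removed.
    """
    staging = Path(app_dir) / name
    if staging.is_dir():
        shutil.rmtree(staging)
        return True
    return False
```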

I can’t understand why it’s struggling to copy the .flows_emonpi_cred.json.backup file, as my installation has a .flows_raspberrypi_cred.json.backup file and works fine.

If it works OK with the node-red backup disabled, that would help me isolate the issue.

Paul

Paul - I am using sudo php backup.php. The $datadir was wrong and I have updated it (see config below). I also set $nodered to “N”. But I am still getting errors.

The temp_data directory does get deleted at the end so I don’t need to manually delete.

Here is the current config:

    $emoncms_server = "http://192.168.40.230/emoncms"; //emoncms local IP address
    $emoncms_apikey = "myAPIkey"; // Needs to be emoncms write API key
    $dbuser = "emoncms"; // Database user name - default is emoncms
    $dbpass = "emonpiemoncmsmysql2016"; // Database user password
    $dbname = "emoncms"; // Database name - default is emoncms
    $datadir = "/home/pi/data"; // path to emoncms data directories
    $store = "7"; // Number of days of archives to store
    // Create archive backups of node-red flows, configs and credentials
    $nodered = "N"; // options Y or N
    $NRdir = "/home/pi/.node-red"; //Node-red backup dir, default is /home/pi/.node-red

Here are the errors:

    PHPTIMESERIES: 91
    --downloaded: 477 bytes
    PHPTIMESERIES: 92
    --downloaded: 216081 bytes
    PHPFINA: 95
    --downloaded: 73440 bytes
    Dumping MYSQL data
    An error has occured, details:tar-based phar "/home/pi/data/myApps/dropbox-archive/backups/archive.tar" cannot be created, header for file "mysql/emoncms_02-06-2016_091928.sql" could not be writtenChecking for expired archives...
    find: `*.gz': No such file or directory
    Uploading new archive to Dropbox, this may take a while...

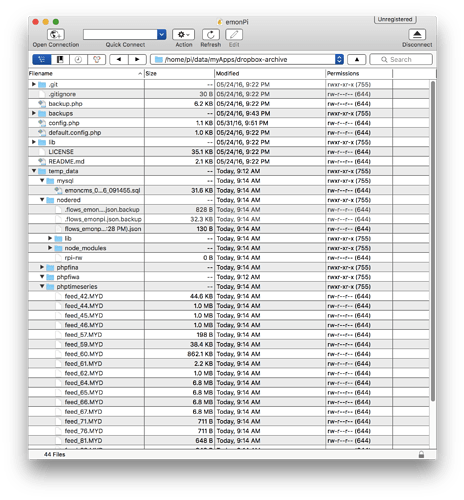

FYI - I can see the temp_data directory populate:

Everything appears to be working OK for you until this section of code.

Up until line 150, the script has successfully copied your feed data directories, node-red configs and MySQL dump to a temporary staging directory, ‘temp_data’.

The next stage in the script is to create a tar file of the entire ‘temp_data’ contents recursively, and it is this process which appears to be throwing up the error for you.
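The “stage, then archive recursively” step can be illustrated with Python’s tarfile module. The dropbox-archive script does this with PHP’s Phar class instead, so this is only a sketch of the technique; the function name and paths are assumptions. Opening the archive in mode "w:gz" writes the compressed tar in one pass rather than building an uncompressed .tar first.

```python
import tarfile
from pathlib import Path


def archive_staging(staging_dir: str, archive_path: str) -> list[str]:
    """Pack staging_dir recursively into a gzipped tar and return the member names.

    Entries are stored relative to staging_dir (e.g. "mysql/emoncms.sql"),
    matching the layout shown in the error messages above.
    """
    staging = Path(staging_dir)
    with tarfile.open(archive_path, "w:gz") as tar:
        for child in sorted(staging.iterdir()):
            tar.add(child, arcname=child.name)  # recurses into directories by default
    with tarfile.open(archive_path, "r:gz") as tar:
        return tar.getnames()
```

The archive path must lie outside the staging directory, or the archive would try to include itself.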

I’ve just completed a clean install on a self-build emonbase and it created the archive fine, with no errors whatsoever, so I’m struggling to replicate your issue. It’s almost as if you’ve run out of storage, yet I can see that you’ve got over 3GB of free storage in your installation partition.

Perhaps one of the more experienced programmers (@nchaveiro, @TrystanLea or @glyn.hudson) has an idea why this works fine on an emonBase but errors on an emonPi.

Paul

Could @Jon be running out of space on the /tmp partition? It is mounted as a 30MB tmpfs partition on the emonPi/emonBase emonSD-03-May16 image.

    pi@emonpi:~ $ df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/root       3.4G  2.3G  948M  72% /
    devtmpfs        483M     0  483M   0% /dev
    tmpfs           487M     0  487M   0% /dev/shm
    tmpfs           487M   37M  450M   8% /run
    tmpfs           5.0M  4.0K  5.0M   1% /run/lock
    tmpfs           487M     0  487M   0% /sys/fs/cgroup
    tmpfs            40M  6.6M   34M  17% /var/lib/openhab
    tmpfs           1.0M  8.0K 1016K   1% /var/lib/dhcpcd5
    tmpfs           1.0M     0  1.0M   0% /var/lib/dhcp
    tmpfs            30M   40K   30M   1% /tmp
    tmpfs            50M  8.2M   42M  17% /var/log
    /dev/mmcblk0p1   60M   20M   41M  34% /boot
    /dev/mmcblk0p3  3.6G  115M  3.3G   4% /home/pi/data

It looks like I am using only 1%. Will it jump up when running the backup.php script?

    pi@emonpi:~ $ df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/root       3.4G  2.3G  963M  71% /
    devtmpfs        483M     0  483M   0% /dev
    tmpfs           487M     0  487M   0% /dev/shm
    tmpfs           487M   50M  438M  11% /run
    tmpfs           5.0M  4.0K  5.0M   1% /run/lock
    tmpfs           487M     0  487M   0% /sys/fs/cgroup
    tmpfs            40M  6.7M   34M  17% /var/lib/openhab
    tmpfs           1.0M  4.0K 1020K   1% /var/lib/dhcpcd5
    tmpfs           1.0M     0  1.0M   0% /var/lib/dhcp
    tmpfs            50M   11M   40M  21% /var/log
    tmpfs            30M   40K   30M   1% /tmp
    /dev/mmcblk0p1   60M   20M   41M  34% /boot
    /dev/mmcblk0p3  3.8G  593M  3.0G  17% /home/pi/data
    pi@emonpi:~ $

I think that’s a typo, Bill. The tmpfs volume is mounted at /tmp, not /var/tmp, which is correct.

@Jon I would think that @glyn.hudson has identified the emonPi issue, as 30MB is not a lot when you consider that Phar will create the archive in /tmp before writing it to disk.

Try increasing it to 50MB in /etc/fstab; that should be sufficient.

Paul

EDIT: 50MB? How wrong was I! See the posts below.

Correct, sorry I meant /tmp

Since a tmpfs filesystem only uses what it actually needs (i.e. the specified size is a maximum as opposed to an allocation), why not specify, say, 100MB?

For example, in this line: tmpfs 487M 0 487M 0% /sys/fs/cgroup

although it appears that 487MB is set aside, the amount actually in use is zero.

I changed /tmp from 30M to 50M, then to 100M, and then to 230M.

    pi@emonpi:~ $ cat /etc/fstab
    tmpfs /tmp tmpfs nodev,nosuid,size=230M,mode=1777 0 0
    pi@emonpi:~/data/myApps/dropbox-archive $ df -h
    Filesystem      Size  Used Avail Use% Mounted on
    tmpfs           230M   36K  230M   1% /tmp

Same errors for all /tmp sizes. I did a reboot after each change.

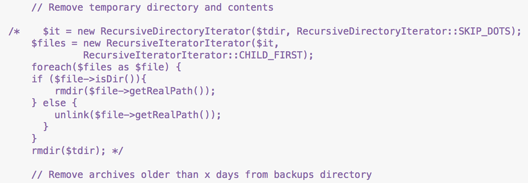

Just for fun, I commented out the ‘Remove temporary directory and contents’ step in backup.php:

The total temp_data directory is 130.1MB.

I modified my installation tonight to replicate /tmp being mounted as tmpfs, the same as on the emonPi, with a 30MB /tmp directory, and I got the same error as you:

    Dumping MYSQL data
    Backing up node-red data
    An error has occured, details:tar-based phar "/home/pi/dropbox-rchive/backups/archive.tar" cannot be created, header for file "mysql/emoncms_03-06-2016_191204.sql" could not be written
    ..Checking for expired archives...

My temp_data directory is 85MB in size, yet surprisingly I had to increase /tmp to just over 160MB before PHAR could process the files correctly. Your temp_data directory is 130MB, so it would probably need slightly more than 230MB!
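Those numbers (85MB staged needed just over 160MB of /tmp, roughly 1.9x) suggest a simple guard before archiving. A Python sketch; the 2x factor is a rule of thumb taken from that single observation, not a documented Phar requirement, and the function names are hypothetical.

```python
import os
import shutil


def dir_size(path: str) -> int:
    """Total apparent size in bytes of regular files under path (symlinks skipped)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def tmp_has_room(staging_dir: str, tmp_dir: str = "/tmp", factor: float = 2.0) -> bool:
    """True if tmp_dir has at least `factor` times the staged data free."""
    needed = dir_size(staging_dir) * factor
    return shutil.disk_usage(tmp_dir).free >= needed
```

With 130MB staged this asks for about 260MB free, which fits with 230MB not being enough.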

This wouldn’t be a problem for non-emonPi users, because /tmp would normally be on disk rather than in RAM.

Removing the ‘maximum’ size constraint for /tmp in fstab would allow the OS to manage memory usage dynamically (as @Bill.Thomson alluded to above), increasing and shrinking the allocation depending on demand. Without a size= option, tmpfs still has a ceiling: it defaults to half of the physical RAM.

tmpfs /tmp tmpfs nodev,nosuid,mode=1777 0 0

RAM usage with the constraint removed:

Before PHAR is called -

tmpfs 463M 4.0K 463M 1% /tmp

When PHAR is creating the archive (takes less than a second) -

tmpfs 463M 160M 304M 35% /tmp

After PHAR has finished -

tmpfs 463M 4.0K 463M 1% /tmp
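Because the spike lasts less than a second, a one-off df can easily miss it. A small Python sampler, run in a second terminal while the backup runs, will catch the peak; the function name and the interval and duration values are arbitrary assumptions.

```python
import shutil
import time


def peak_usage(path: str = "/tmp", duration: float = 60.0, interval: float = 0.1) -> int:
    """Poll disk usage of path for `duration` seconds; return the highest 'used' bytes seen."""
    peak = 0
    deadline = time.monotonic() + duration
    while time.monotonic() < deadline:
        peak = max(peak, shutil.disk_usage(path).used)
        time.sleep(interval)
    return peak
```

For example, `print(peak_usage() / 1e6, "MB")` started just before backup.php gives the highest /tmp usage seen during the following minute.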

Paul

Paul - that worked, for the most part.

The backup script ran with no errors! But it seemed to bring the Pi 2B to a near halt. From the “Now compressing archive…” step to the very end of the script I could not enter any commands, and top would not update (it was set to refresh every 2 seconds). It also set off the Node-RED watchdog (20 seconds) that looks for emonhub/rx/5 values from the emonPi. Can the compression code be told to play nice?

EDIT: Digging through the data, I did not drop any data points, so maybe this just slowed the MQTT side of things…
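One way to make compression “play nice” is to lower the process’s CPU priority and use a lighter compression level. A minimal Python sketch of the idea, not the dropbox-archive code: `os.nice(19)` gives the process the lowest scheduling priority (an unprivileged process cannot undo it), and `compresslevel=1` trades archive size for CPU time. SD-card I/O may also be a bottleneck on a Pi, which CPU niceness does not address; the separate ionice utility sets I/O scheduling priority. The function name is hypothetical.

```python
import gzip
import os
import shutil


def compress_gently(src: str, dst: str, level: int = 1, niceness: int = 19) -> None:
    """gzip src into dst at low CPU priority.

    os.nice() raises the niceness of the whole current process, so call this
    from a dedicated backup process rather than a long-running service.
    """
    os.nice(niceness)
    with open(src, "rb") as fin, gzip.open(dst, "wb", compresslevel=level) as fout:
        shutil.copyfileobj(fin, fout, 1024 * 1024)  # stream in 1 MiB chunks
```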